Arkhangelsky and Imbens review causal panel methods

Highly recommended readers read the new review

This is a great new review article that just posted about causal panel methods by Arkhangelsky and Imbens. You may recall that they, along with a few others, authored the synthetic difference-in-differences paper. I have done a slow skimming of the article twice and the things that struck me is how beautifully written it is. It takes like a “world building” approach and goes back to the 1980s work on panel models by people like Chamberlain, into difference-in-differences and program evaluation then to synthetic control. What was also nice I think was how it mirrored my own opinions and thoughts but that because it was Arkhangelsky and Imbens, it was deeper and able to interact with all parts of the technical elements. But I also just loved, to be honest, the history of thought embedded in it. Take for instance these sentences:

“This literature was initially motivated by the increased availability of various large public-use longitudinal data sets starting in the 1960s. These data sets included the Panel Study of Income Dynamics, the National Longitudinal Survey of Youth, which are proper panels where individuals are followed over fairly long periods of time, and the Current Population Survey, which is primarily a repeated cross-section data set with some short term panel features. These data sets vary substantially in the length of the time series component, motivating different methods that could account for such data configurations.”

I love hearing people describe the evolution of econometrics as endogenous to technological events such as the sudden availability of panel surveys. It’s interesting when you think about it that econometricians are responding to data, in other words. You could even say that about diff-in-diff maybe — that the US legal system is such that 50 states and countless municipalities are given relative large latitude to design their own laws, and that helped build the “natural experiment” style research. Had it not been that datasets existed harmonized over those 50 states, though, then you couldn’t really as easily work with such things.

But it’s also just so thorough, and I think it will bear close reading by everyone. You can see a particular notational setup that I think is more common now with Imbens and coauthors where things are expressed in terms of matrix “block structure”. This is how the matrix completion with nuclear norm regularization paper is set up, and that is sort of the framing they use in this review too. They call this the “data frame” of the panel. Here’s a brief quote:

“One classification the panel data literature can be organized by the shape of the data frame. This is not an exact classification, and which category a particular data set fits, and which methods are appropriate, in part depends on the magnitude of the cross-section and time-series correlations. Nevertheless, it is useful to reflect on the relative magnitude of the cross-section and time-series dimensions as it has implications for the relative merits of statistical methods for the analysis of such data.”

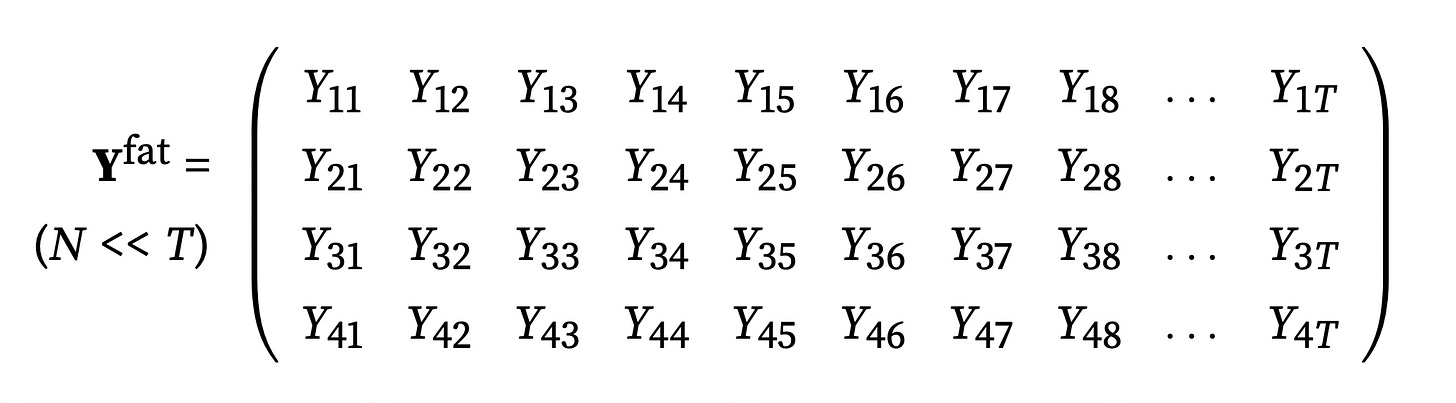

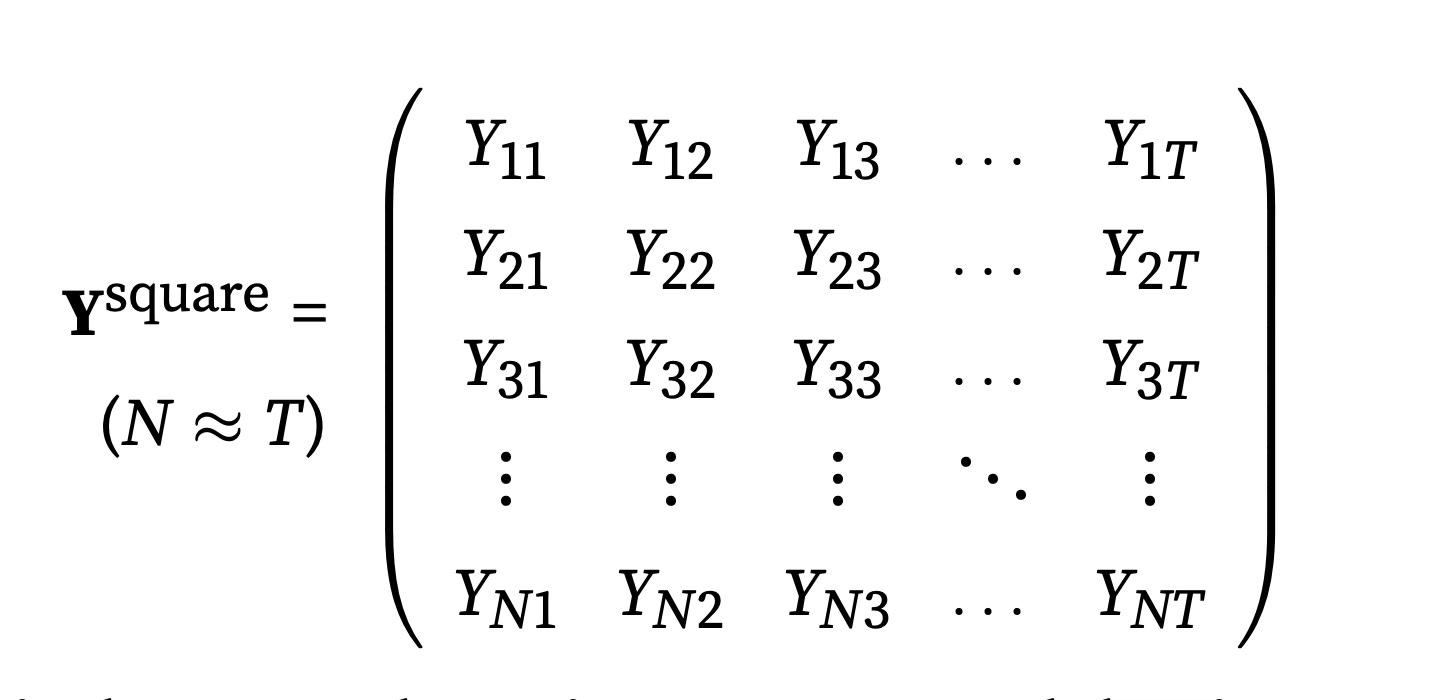

In the MCNN paper, and in this review, they describe three basic kinds of block structures too: thin, fat and square. I’ve put them here so you can see. They tend to talk about this because there are conventional research designs we’re used to that actually are one of these. So synthetic control, for instance, will often be long time periods (or are supposed to be) relative to units, but then something like the Lalonde dataset will have large number of units but only three periods. But oftentimes you’ll also have around the same number of time and units which they call square. This review carries that notation forward into the broader panel framework, and so I suspect we can probably expect to see more people using it.

Keep reading with a 7-day free trial

Subscribe to Scott's Mixtape Substack to keep reading this post and get 7 days of free access to the full post archives.