Claude Code 19: When the Reclassification Is Massive But the Trends Don't Change, Something Interesting Is Happening (Part 4)

Testing the marginal-cases hypothesis with a thermometer, and letting Claude Code run wild on datasets

In Parts 1 and 2, I showed you the setup and the punchline: gpt-4o-mini agreed with the original RoBERTa classifier on only 69% of individual speeches, but the aggregate trends — partisan polarization, country-of-origin patterns, the whole historical arc — were virtually identical. Over 100,000 labels changed and yet the original story didn’t.

That result was interesting at first, but then kept bugging me. How can you reclassify 100,000 speeches, more or less saying that the original RoBERTa model was wrong, and yet all subsequent analysis finds almost the exact same things? What does that even imply about measurement itself?

So yesterday I spent an hour with Claude Code extending the analysis: we classified the speeches a second time at OpenAI to test a conjecture. I had two conjectures, in fact. The first was that the reclassified speeches were the “marginal speeches.” The second was that they were canceling out because the moves were roughly symmetric, from anti to neutral and from pro to neutral. And if that was the case, I wanted to know whether this was a special case: does swapping a one-shot LLM for human annotation plus RoBERTa only work when there is a built-in cancellation mechanism, as there is with labels coded -1, 0, and 1? Would it work with four categories that don’t cancel out (e.g., race categories)?

So today I spent another hour with Claude Code trying to figure out why. I do not explore that last question in today’s video, though I will note that Claude Code crawled the web until it found four new datasets of classified text that will let me evaluate the “three-body problem.” Today’s post covers everything but that.

Thank you for your support! This Substack is a labor of love, and the Claude Code series remains free for the first several days. So if you want to keep reading it for free, keep your eyes peeled for updates! But maybe consider becoming a paying subscriber too: it’s only $5/month, about the price of a cup of coffee!

Jason Fletcher’s Question

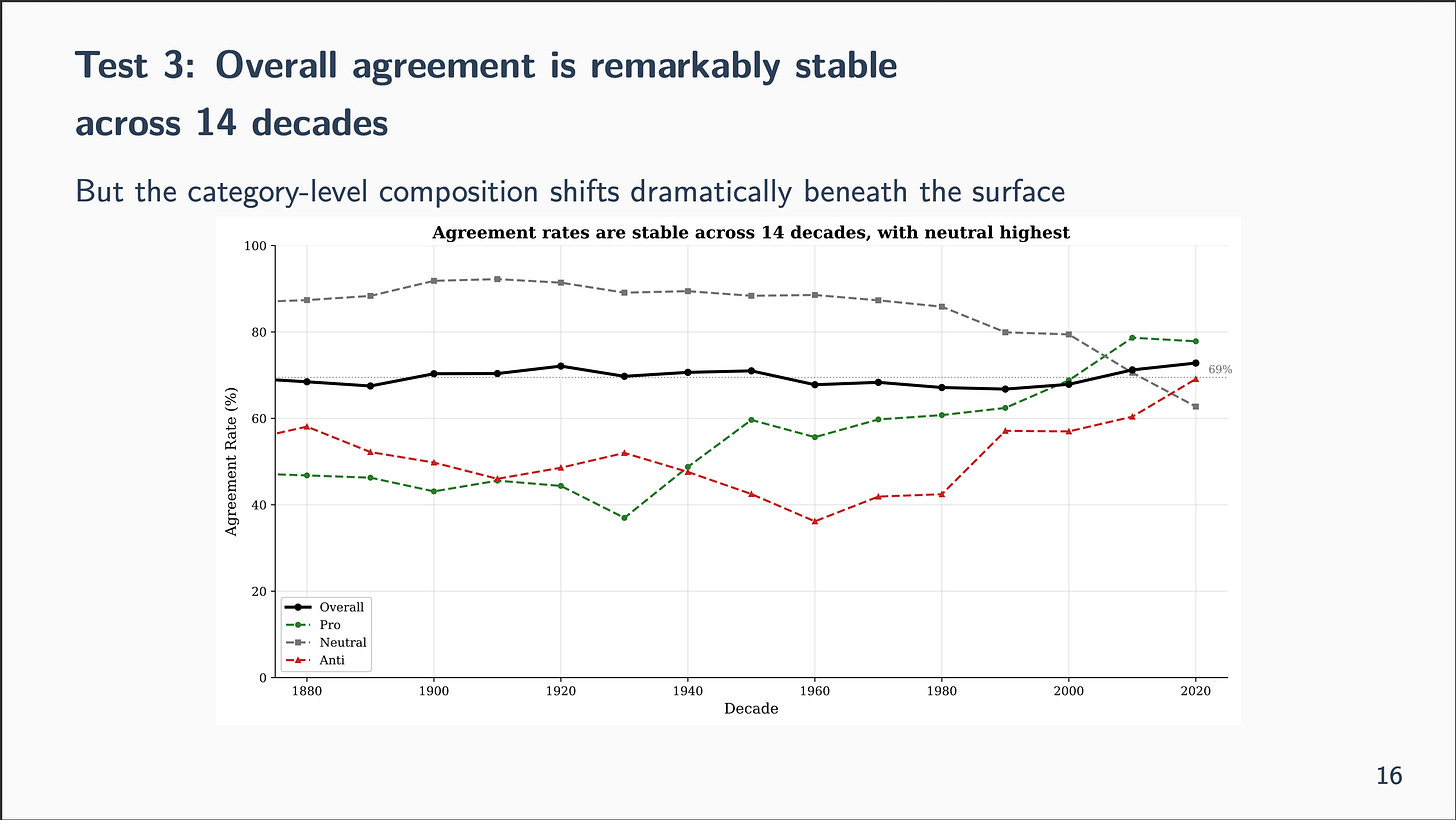

My friend Jason Fletcher — a health economist at Wisconsin — asked a good question when I showed him the results: does the agreement break down for older speeches? Congressional language in the 1880s is nothing like the 2010s. If gpt-4o-mini is a creature of modern text, you’d expect it to struggle with 19th-century rhetoric.

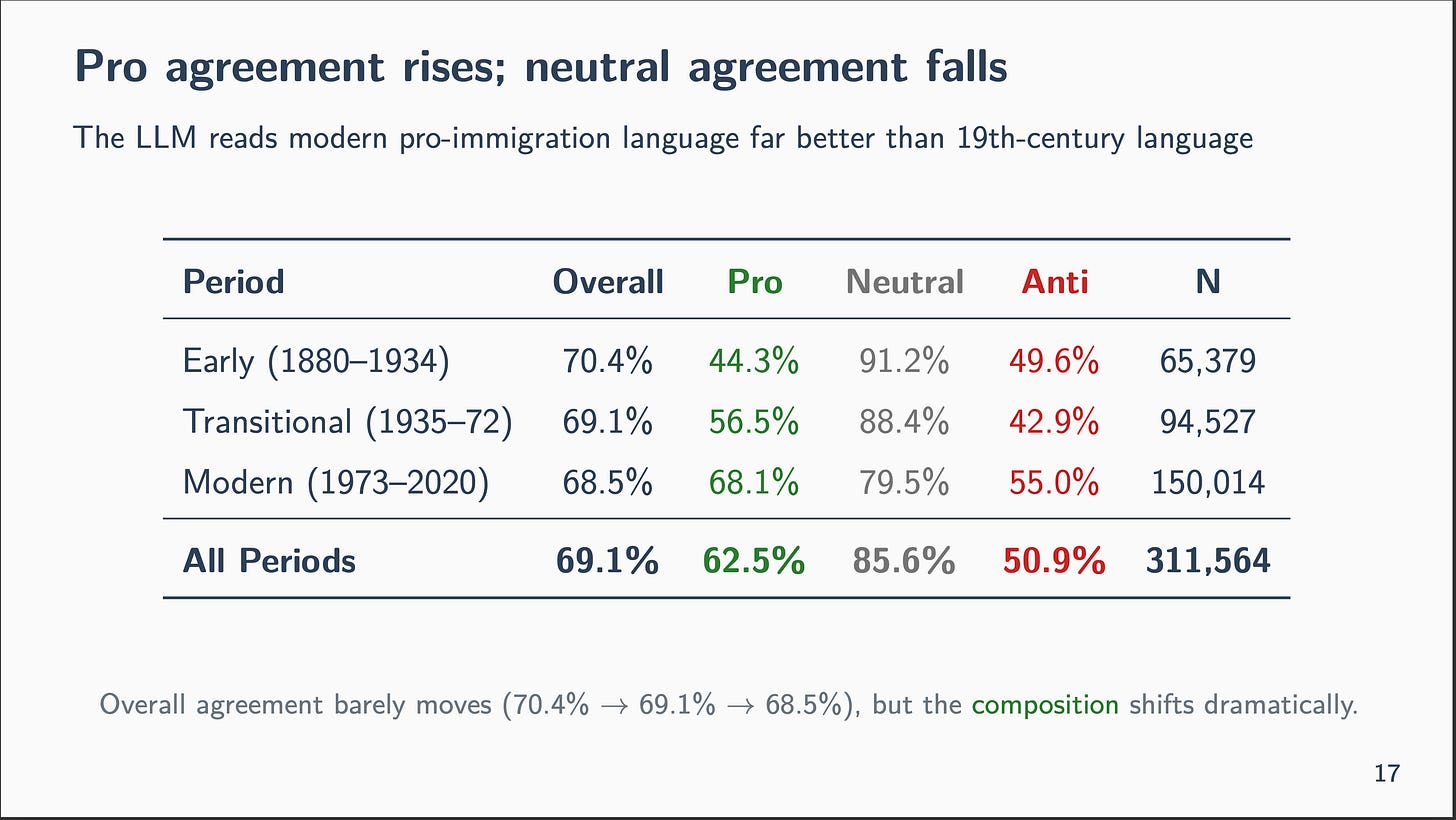

We built two tests. The surprise: overall agreement barely moves. It’s 70% in the 1880s and 69% in the modern era. The LLM handles 19th-century speech about as well as 21st-century speech.

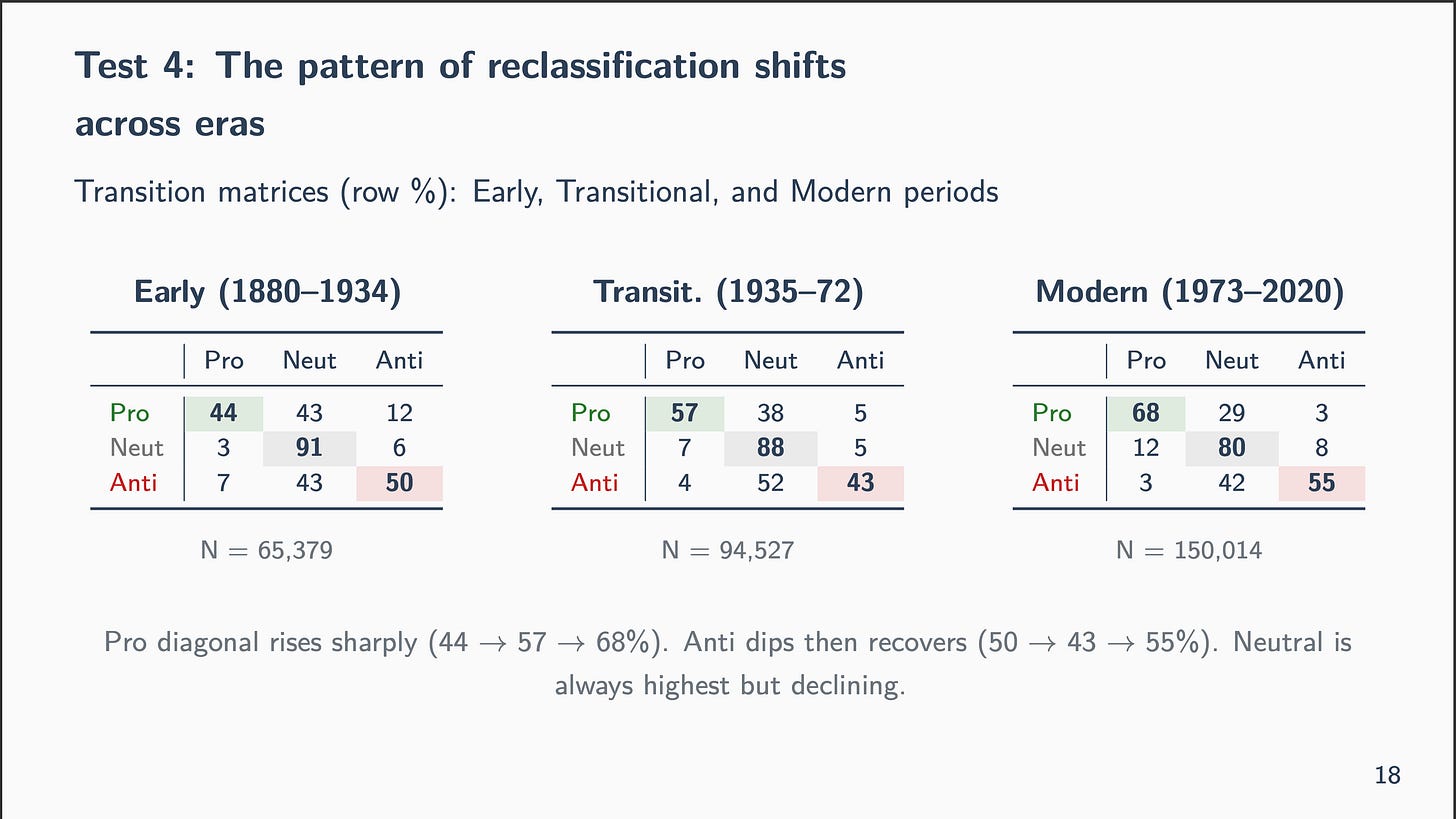

But beneath that stable surface, the composition rotates dramatically. Pro-immigration agreement rises from 44% in the early period to 68% in the modern era. Neutral agreement falls from 91% to 80%. They cancel in aggregate — a different kind of balancing act, hiding in plain sight.
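To make “agreement by category” concrete, here is a minimal sketch of how such rates could be computed. It assumes agreement is measured conditional on the original RoBERTa label, which is one reasonable reading of the figures above rather than something the analysis confirms. The function name, the period names, and the toy rows are all made up for illustration.

```python
from collections import defaultdict

def agreement_by_label(rows):
    """rows: iterable of (period, roberta_label, llm_label) tuples.

    Returns {(period, roberta_label): share of those speeches on which
    the LLM gave the same label}. Conditioning on the RoBERTa label is
    an assumption made for this sketch.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for period, original, llm in rows:
        totals[(period, original)] += 1
        hits[(period, original)] += int(original == llm)
    return {key: hits[key] / totals[key] for key in totals}

# Toy rows, invented for illustration (not the real speeches).
toy_rows = [
    ("early", "pro", "neutral"),
    ("early", "pro", "pro"),
    ("early", "neutral", "neutral"),
    ("modern", "pro", "pro"),
    ("modern", "pro", "pro"),
    ("modern", "neutral", "anti"),
]
rates = agreement_by_label(toy_rows)
for key in sorted(rates):
    print(key, round(rates[key], 2))
```

Overall agreement can stay flat across periods while these per-label rates move in opposite directions, which is the rotation described above.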

You can find this “beautiful deck” here if you want to peruse it yourself.

My Conjecture: Marginal Cases

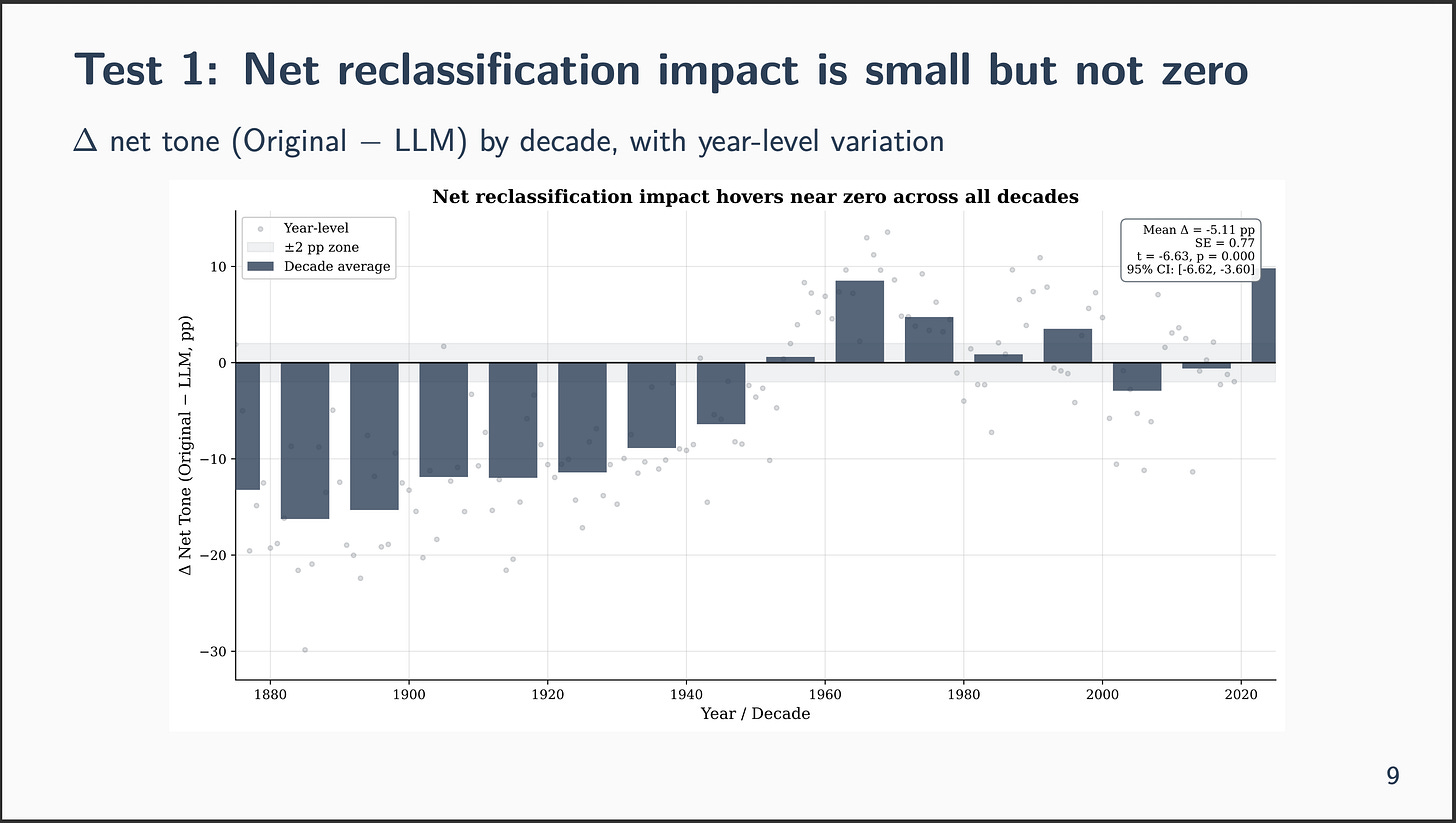

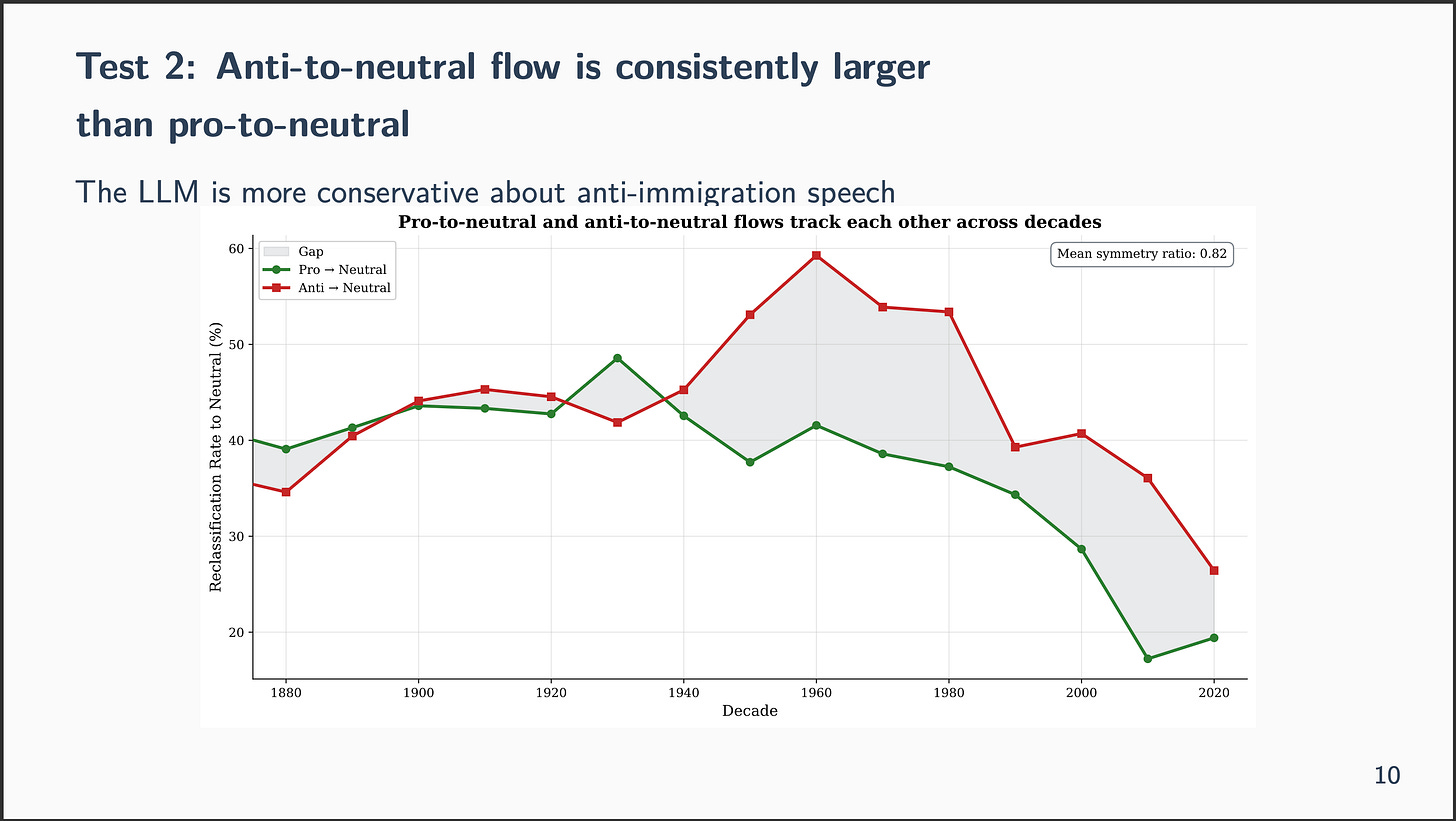

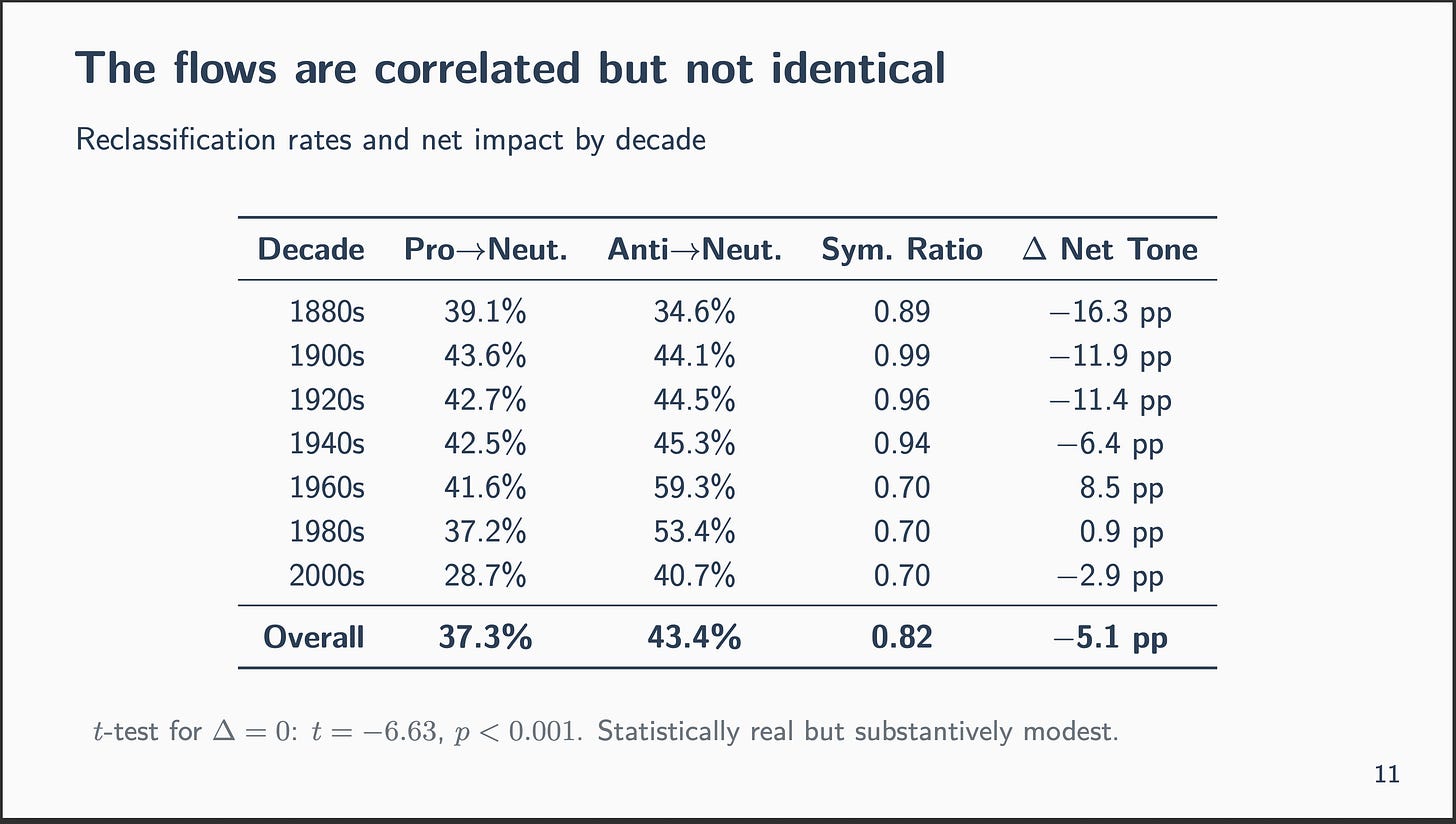

Here’s the theory I kept coming back to. The key measure in Card et al. is net tone — the percentage of pro-immigration speeches minus the percentage of anti-immigration speeches. It’s a difference. And when the LLM reclassifies, it’s overwhelmingly pulling speeches toward neutral from both sides. 33% of Pro goes to Neutral. 44% of Anti goes to Neutral. Direct flips between Pro and Anti are rare — only about 4-5%.

So think of it like two graders scoring essays as A, B, or C. They disagree on a third of the essays, but the class average is the same every semester. That only works if the disagreements cancel — if the strict grader downgrades borderline A’s to B’s and borderline C’s to B’s at roughly equal rates. The B pile grows. The average doesn’t move.
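The grader analogy is easy to check with arithmetic. The sketch below uses invented counts, not the real data: it moves equal numbers of Pro and Anti speeches into Neutral and shows that net tone, defined as percent pro minus percent anti, does not change even though a fifth of the labels did.

```python
def net_tone(labels):
    """Percent pro minus percent anti, in percentage points."""
    n = len(labels)
    pro = sum(1 for x in labels if x == "pro")
    anti = sum(1 for x in labels if x == "anti")
    return 100.0 * (pro - anti) / n

# Invented example: 100 speeches, position i is the same speech in both lists.
original = ["pro"] * 40 + ["neutral"] * 30 + ["anti"] * 30
# The second classifier moves 10 borderline pro and 10 borderline anti
# speeches into neutral and leaves everything else alone.
relabeled = ["pro"] * 30 + ["neutral"] * 50 + ["anti"] * 20

changed = sum(a != b for a, b in zip(original, relabeled))
print(changed)               # number of labels that differ
print(net_tone(original))    # net tone under the original labels
print(net_tone(relabeled))   # net tone after the symmetric relabeling
```

If the second classifier had pulled 15 from Anti and only 10 from Pro, net tone would shift by 5 points, which is the kind of asymmetry a formal test looks for.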

I had Claude Code build two formal tests. A one-sample t-test rejects perfect symmetry: the mean delta in net tone is about 5 percentage points, and the symmetry ratio is 0.82 rather than 1.0. The LLM pulls harder from Anti than from Pro. But 5 points is small relative to the 40-60 point partisan swings that define the story. The pull toward Neutral is asymmetric, but the two flows are correlated, and large-sample averaging absorbs what’s left.
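For readers who want to see the shape of the symmetry test, here is a minimal sketch of a one-sample t-test against zero using only the standard library. It assumes the unit of observation is a per-period delta in net tone (LLM minus RoBERTa), which is a guess about the setup, and the numbers are invented to average 5 points.

```python
import math
import statistics

def one_sample_t(deltas, mu0=0.0):
    """Return (mean, t statistic, degrees of freedom) for H0: mean == mu0."""
    n = len(deltas)
    mean = statistics.fmean(deltas)
    sd = statistics.stdev(deltas)          # sample standard deviation
    t = (mean - mu0) / (sd / math.sqrt(n))
    return mean, t, n - 1

# Invented per-period deltas in net tone, in percentage points.
deltas = [4.0, 6.0, 5.0, 3.0, 7.0, 5.0, 6.0, 4.0]
mean_delta, t_stat, dof = one_sample_t(deltas)
print(mean_delta, round(t_stat, 2), dof)
```

Perfect symmetry would put the mean delta at zero. A large t statistic says the deltas are reliably different from zero, but it says nothing about whether the gap is big enough to matter, which is why the comparison to the partisan swings is the more important number.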

The Thermometer

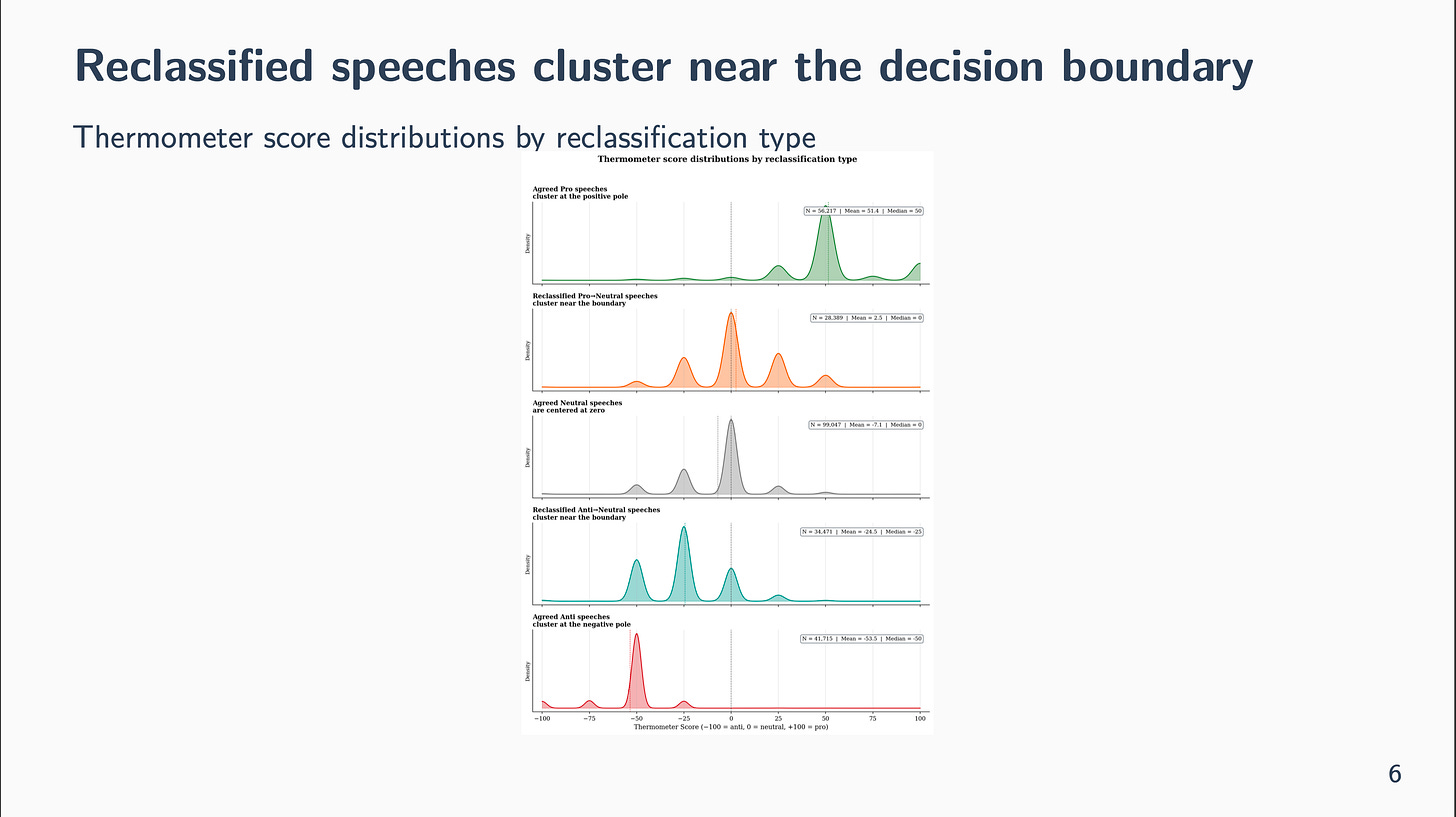

To push this further, I wanted to see where on the spectrum the reclassified speeches actually fall. So we sent all 305,000 speeches back to OpenAI — same speeches, same model — but this time asking for a continuous score from -100 (anti-immigration) to +100 (pro-immigration), with 0 as neutral.
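Here is a rough sketch of what one thermometer request could look like as a line in a batch input file. The JSONL envelope (`custom_id`, `method`, `url`, `body`) follows OpenAI’s documented batch format for chat completions. The prompt wording, the `custom_id` scheme, and the parsing helper are assumptions for illustration, not the prompt actually used.

```python
import json

# Hypothetical prompt; the real wording is not shown in this post.
SYSTEM_PROMPT = (
    "Rate the tone of this congressional speech toward immigration on a scale "
    "from -100 (anti-immigration) to +100 (pro-immigration), where 0 is neutral. "
    "Reply with a single integer and nothing else."
)

def build_request(speech_id, text, model="gpt-4o-mini"):
    """Return one JSONL line for a batch input file."""
    return json.dumps({
        "custom_id": f"speech-{speech_id}",   # hypothetical ID scheme
        "method": "POST",
        "url": "/v1/chat/completions",
        "body": {
            "model": model,
            "temperature": 0,
            "messages": [
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": text},
            ],
        },
    })

def parse_score(reply):
    """Return an int in [-100, 100], or None if the reply is unusable."""
    try:
        score = int(reply.strip())
    except ValueError:
        return None
    return score if -100 <= score <= 100 else None

line = build_request(17, "Mr. Speaker, I rise today to ...")
print(line[:60])
print(parse_score("-35"), parse_score("neutral"), parse_score("250"))
```

Returning `None` for unparseable or out-of-range replies keeps bad responses out of the score distribution instead of silently coding them as zero, which would inflate the pile near neutral.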

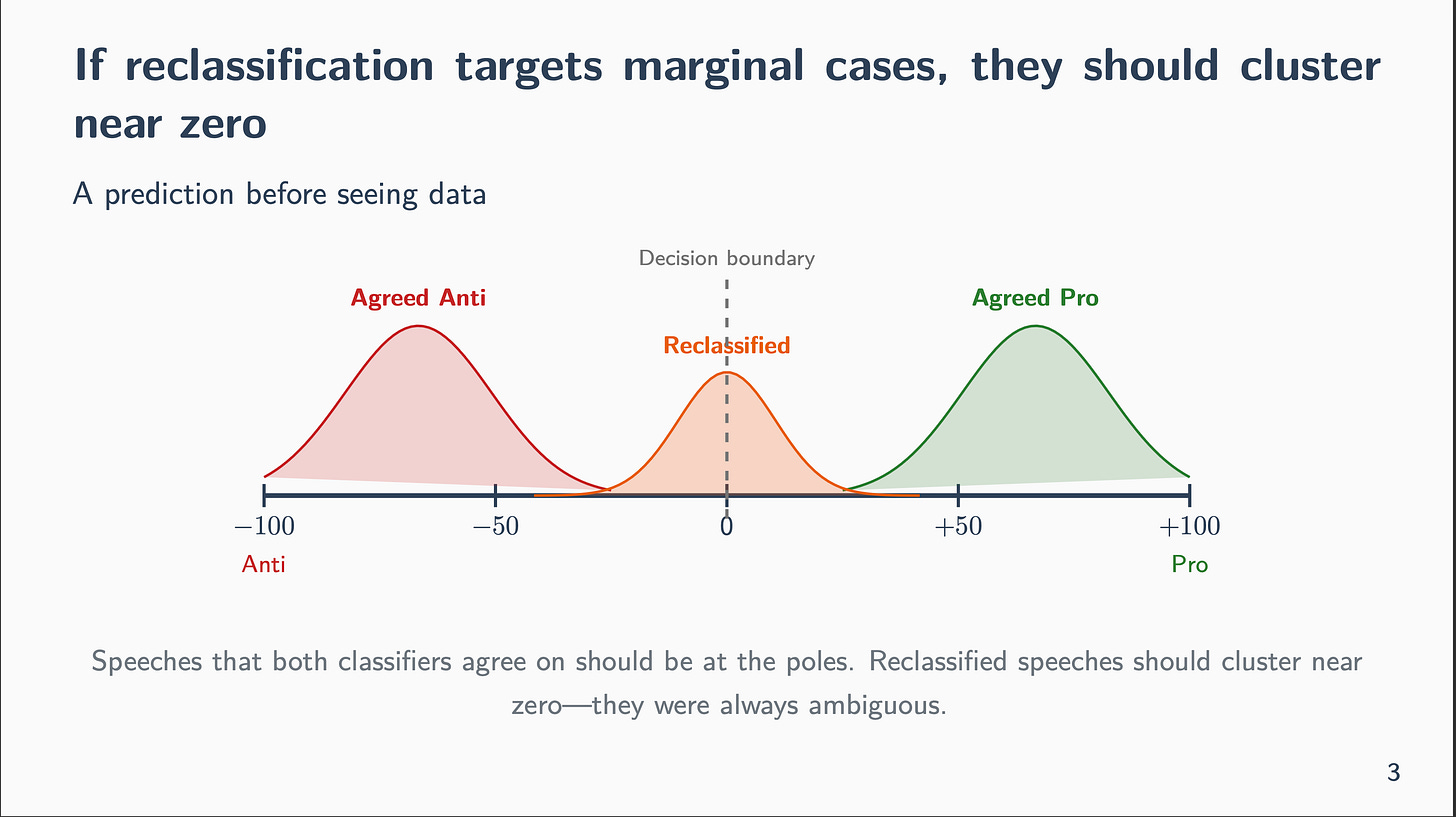

The prediction: if reclassification is really about marginal cases, the speeches that got reclassified should cluster near zero. They were always borderline. The LLM just called them differently.

Getting the data back from OpenAI was its own adventure. The batch submission kept hitting SSL errors around batch 17 — probably Dropbox syncing interfering with the uploads. Claude Code diagnosed this, added retry logic with exponential backoff, and pushed all 39 batches through. Another ~$11, another ~2.6 hours of processing time. The batch API continues to be absurdly cheap.
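Retry with exponential backoff is a generic pattern, and a minimal version looks something like the sketch below. This is not the code Claude Code wrote. It assumes the failure surfaces as an `OSError` (Python’s `ssl.SSLError` and `ConnectionError` are both subclasses); a real API client may raise its own exception types, so the `except` clause would need adjusting. The upload function is a stand-in that fails twice and then succeeds.

```python
import random
import time

def with_retries(task, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call task() until it succeeds, doubling the wait after each failure."""
    for attempt in range(max_attempts):
        try:
            return task()
        except OSError:
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the original error
            # 1x, 2x, 4x ... the base delay, plus jitter so retries don't align
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)

# Stand-in for a batch upload that fails twice before going through.
calls = {"n": 0}

def flaky_upload():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("simulated SSL failure")
    return "uploaded"

waits = []  # record the delays instead of actually sleeping
result = with_retries(flaky_upload, sleep=waits.append)
print(result, calls["n"], [round(w, 1) for w in waits])
```

Passing `sleep` as a parameter is a small design choice that makes the helper testable without waiting in real time.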

Once the results came back, we merged three datasets: the original RoBERTa labels, the LLM tripartite labels, and the new thermometer scores. Then we tested the hypothesis three ways.

First, the distributions. We plotted thermometer scores separately for speeches where the classifiers agreed versus speeches that got reclassified. The reclassified Pro-to-Neutral speeches cluster near zero from the right. The reclassified Anti-to-Neutral speeches cluster near zero from the left. The speeches where both classifiers agreed sit further out toward the poles. Exactly what the theory predicts.

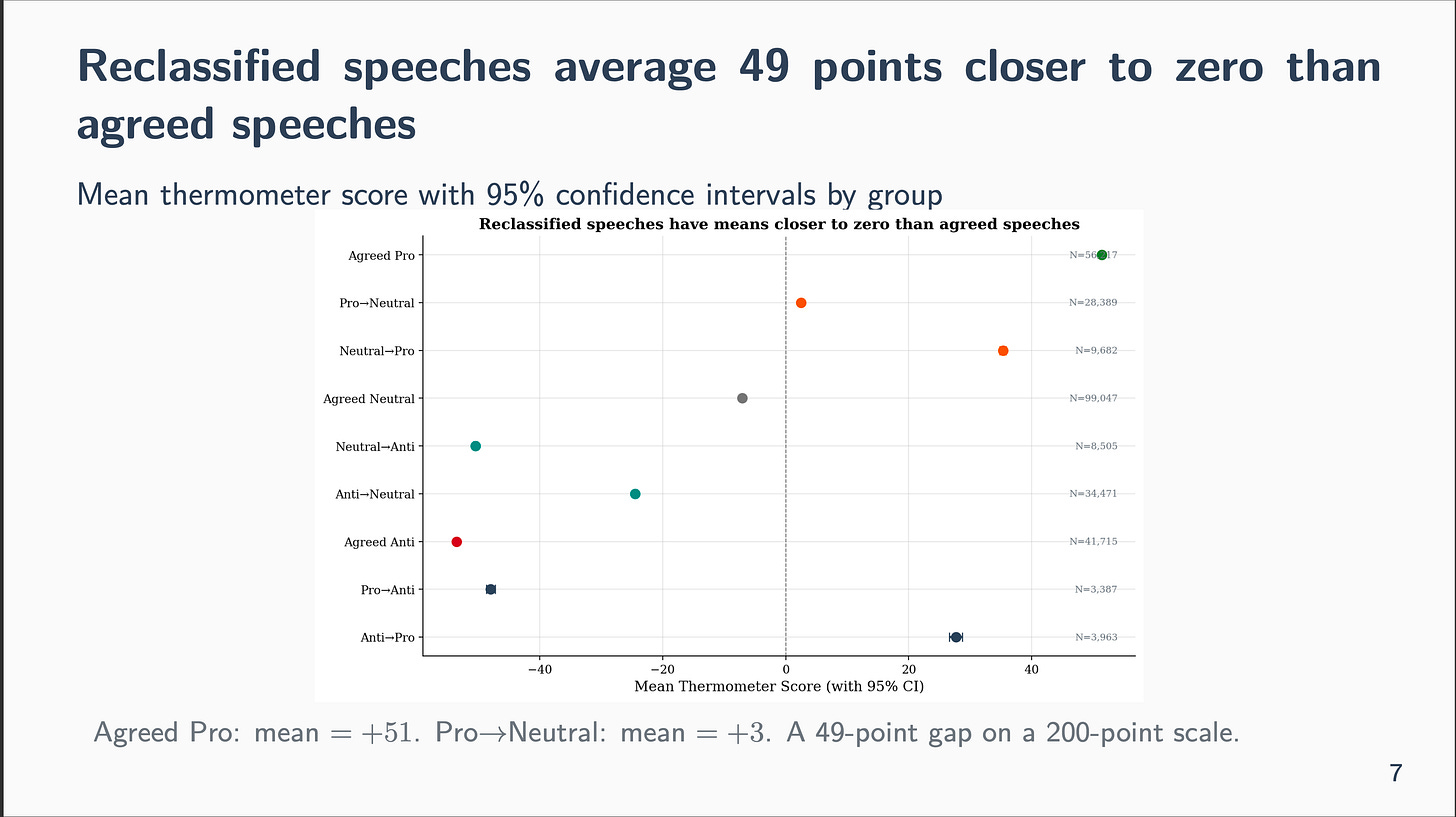

Second, the means. Reclassified speeches have thermometer scores dramatically closer to zero than agreed speeches. The marginal-cases story holds up quantitatively, not just visually.
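The comparison of means boils down to a few lines once the three sources are merged. The sketch below assumes each merged record carries a RoBERTa label, an LLM label, and a thermometer score. The field names and the five toy records are invented.

```python
from statistics import fmean

def mean_abs_score(records, reclassified):
    """Mean distance from zero for speeches that were (or were not) reclassified."""
    return fmean(
        abs(r["thermometer"])
        for r in records
        if (r["roberta"] != r["llm"]) == reclassified
    )

# Invented merged records, one per speech.
records = [
    {"roberta": "pro", "llm": "pro", "thermometer": 70},
    {"roberta": "anti", "llm": "anti", "thermometer": -80},
    {"roberta": "pro", "llm": "neutral", "thermometer": 15},
    {"roberta": "anti", "llm": "neutral", "thermometer": -10},
    {"roberta": "neutral", "llm": "neutral", "thermometer": 5},
]
reclassified_mean = mean_abs_score(records, reclassified=True)
agreed_mean = mean_abs_score(records, reclassified=False)
print(reclassified_mean, round(agreed_mean, 1))
```

Taking the absolute value matters here: averaging signed scores would let pro and anti speeches cancel and make every group look close to zero.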

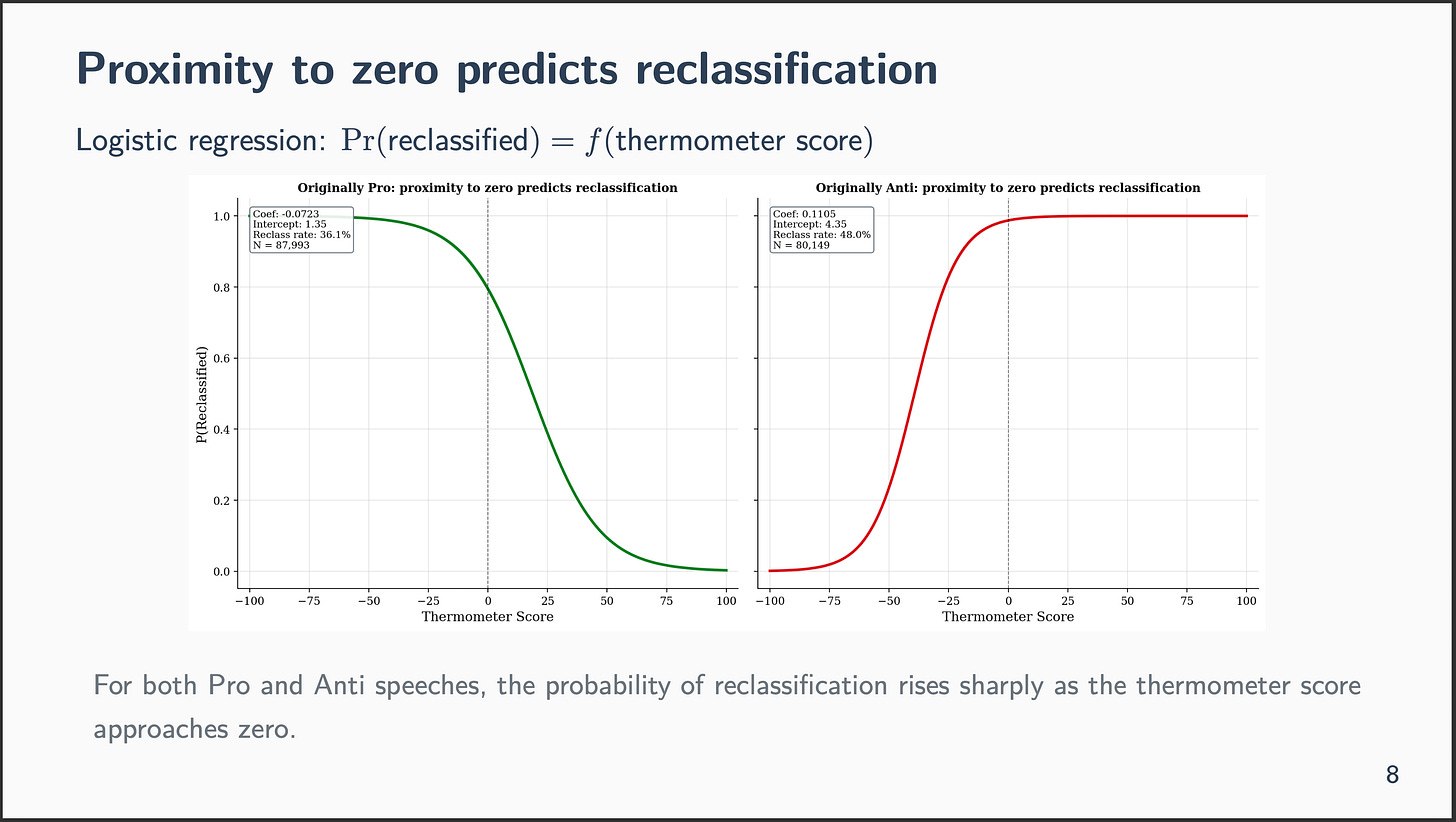

Third, and most formally: we ran logistic regressions asking whether proximity to zero on the thermometer predicts the probability of reclassification. It does. Speeches near the boundary are far more likely to get reclassified than speeches at the poles. The relationship is monotonic and strong.
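As a minimal sketch of that regression, the code below fits a one-variable logistic model by plain gradient ascent, with distance from zero on the thermometer as the predictor and a reclassification indicator as the outcome. It is a from-scratch stand-in for whatever statistical package was actually used, and the eleven data points are invented.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.5, steps=5000):
    """Fit P(y=1) = sigmoid(b0 + b1*x) by gradient ascent on the mean log-likelihood."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            err = y - sigmoid(b0 + b1 * x)
            g0 += err
            g1 += err * x
        b0 += lr * g0 / n
        b1 += lr * g1 / n
    return b0, b1

# Invented data: |thermometer score| and whether the speech was reclassified.
abs_scores = [5, 10, 15, 20, 30, 40, 50, 60, 70, 80, 90]
reclassified = [1, 1, 1, 0, 1, 0, 1, 0, 0, 0, 0]
xs = [s / 100 for s in abs_scores]   # rescale to [0, 1] for stable steps

b0, b1 = fit_logistic(xs, reclassified)
p_near = sigmoid(b0 + b1 * 0.05)     # speech 5 points from zero
p_far = sigmoid(b0 + b1 * 0.90)      # speech 90 points from zero
print(round(b1, 2), round(p_near, 2), round(p_far, 2))
```

A negative slope on distance from zero is the marginal-cases prediction: the further a speech sits from the boundary, the less likely it is to be reclassified.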

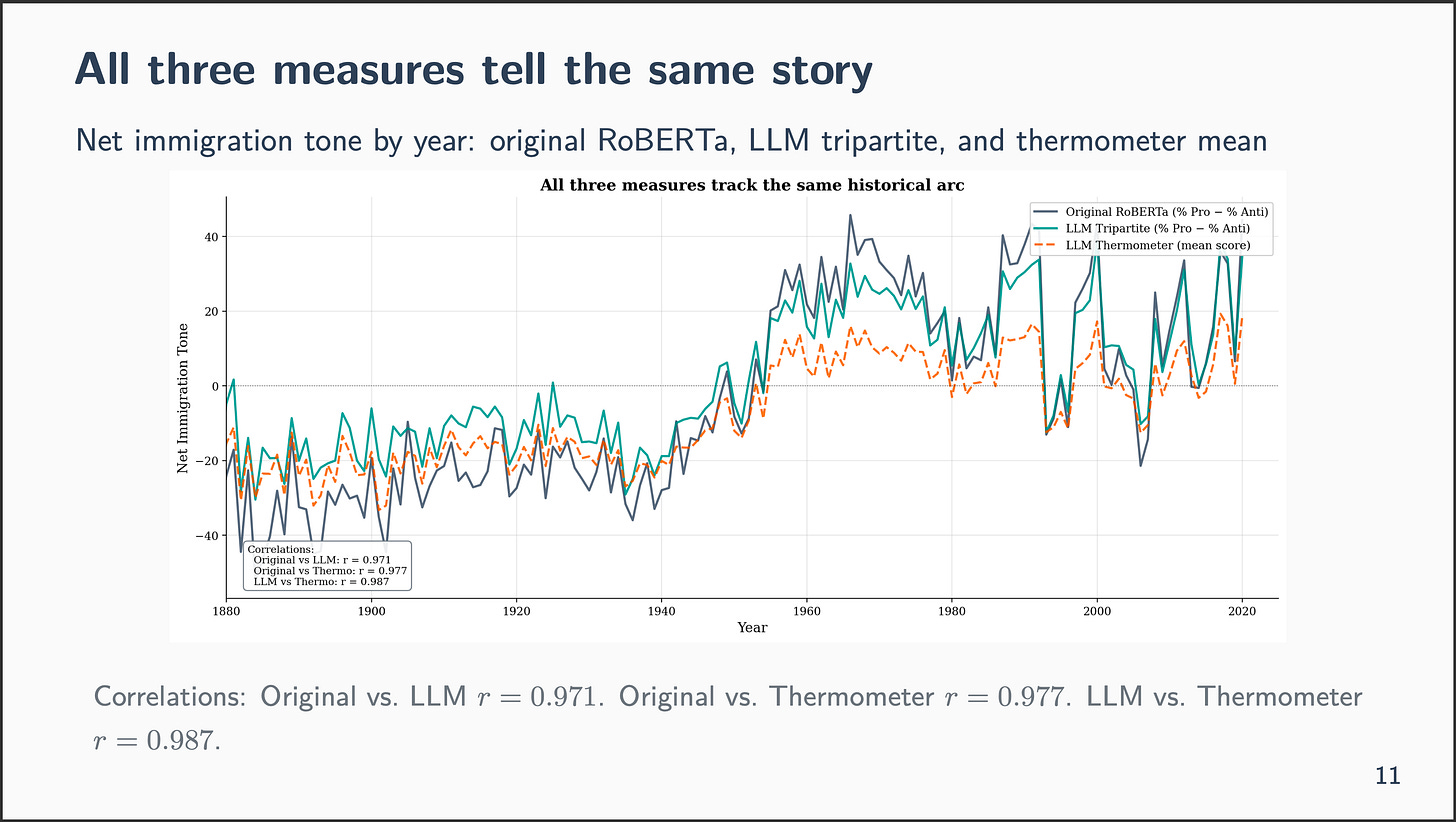

And here we see a summary of the trends for all three: the original RoBERTa model, the LLM tripartite reclassification from last week, and the new thermometer classification from today. Same thing. They all agree, even though RoBERTa was trained on 7,500 speeches annotated by students, while I used a one-shot method and spent $10-11 per run through OpenAI’s batch API, which is 50% cheaper than standard requests.

The Three-Body Problem

But here’s what I can’t stop thinking about. This cancellation mechanism has a very specific structure: two poles and a center. Pro and Anti are +1 and -1 on a one-dimensional scale, and Neutral is the absorbing middle. Losses from both poles wash toward the center, and because the measure is a difference, they cancel.

What happens with four categories? Or five? Or twenty? If there’s no single absorbing center, does the whole thing fall apart?

I called this the three-body problem — partly as a joke, partly because I think there’s something genuinely structural about having exactly three categories with a symmetric setup.

To test this, I had Claude Code — running in a separate terminal with --dangerously-skip-permissions — search online for publicly available datasets with 4+ human-annotated categories. It found four: AG News (4 categories), SST-5 sentiment (5 categories on an ordinal scale), 20 Newsgroups (20 categories), and DBpedia-14 (14 ontological categories). It downloaded all of them, wrote READMEs for each, and organized them in the project directory.

I haven’t run the analysis yet. That’s tomorrow. But the plan is to classify all four datasets with gpt-4o-mini, compare with the original human labels, and see whether aggregate distributions are preserved the way they were for the immigration speeches. If the three-category setup is special, we should see distribution preservation break down as the number of categories increases.
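One way to score “distribution preservation” with any number of categories is total variation distance between the human label shares and the LLM label shares. That metric is an assumption chosen for illustration, not something the plan above specifies, and the labels below are toy data that borrows the AG News category names.

```python
from collections import Counter

def shares(labels):
    """Share of each category among the labels."""
    counts = Counter(labels)
    n = len(labels)
    return {k: v / n for k, v in counts.items()}

def total_variation(human, llm):
    """Half the summed absolute gap in category shares: 0 = identical, 1 = disjoint."""
    p, q = shares(human), shares(llm)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))

def agreement(human, llm):
    """Share of items on which the two label sets match."""
    return sum(a == b for a, b in zip(human, llm)) / len(human)

# Toy labels: 100 items, evenly spread over four categories.
human = ["World", "Sports", "Business", "Sci/Tech"] * 25
# Case 1: every item gets a different label, yet the shares are untouched.
rotated = human[1:] + human[:1]
# Case 2: the LLM over-assigns one category.
skewed = ["World"] * 40 + ["Sports"] * 20 + ["Business"] * 20 + ["Sci/Tech"] * 20

print(agreement(human, rotated), total_variation(human, rotated))
print(round(total_variation(human, skewed), 2))
```

The rotated case is the extreme version of the puzzle: zero item-level agreement with perfectly preserved aggregates. Whether real disagreements behave like that without an absorbing middle category is the open question.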

What’s Easy and What’s Hard with Claude Code

This series started as an experiment in what Claude Code can actually do. Three sessions in, I’m developing a clearer picture.

What’s easy now: writing analysis scripts that follow established patterns, submitting batch API jobs, generating publication-quality figures, building Beamer decks, managing file organization, and debugging infrastructure problems like SSL errors. Claude Code handles all of this faster than I could.

What’s still hard: the thinking. The conjecture about marginal cases — that was mine. The connection to the three-body problem — mine. The decision to use a thermometer to test it — mine. Jason’s question about temporal stability — his. Claude Code is extraordinary at executing ideas, but the ideas still have to come from somewhere.

The most productive workflow I’ve found is what I’d call conversational direction. I think out loud. Claude Code listens, proposes, executes. I steer. It builds. The dialogue is the thinking process.

What’s Next

Next week, after Valentine’s, I’ll run the external dataset analysis and see if the three-body hypothesis holds up. I’ll also build a proper deck for the thermometer results, following the “rhetoric of decks” principles I’ve been developing, with assertion titles, TikZ intuition diagrams, and beautiful figures.

If you want to see where this goes, stick around.

Thanks for following along. All Claude Code posts are free when they first go out, though everything goes behind the paywall eventually. Normally I flip a coin on what gets paywalled, but for Claude Code, every new post starts free. If you like this series, I hope you’ll consider becoming a paying subscriber — it’s only $5/month or $50/year, the minimum Substack allows.
