Claude Code 20: Faculty Adoption of AI, Decks and Folders, and Non-Trivial Security Risks

This post felt a bit melodramatic and/or hysterical, even as I wrote it. But I honestly believe every bit of this. I am posting it mainly so that anyone who wants the deck and/or the essay to help them articulate a point of view to administrators and employers can have it. I don’t know if it will help, but it’s at least something to consider. The .tex file for the deck is at the end also fwiw.

I recently gave a talk to a small group at Baylor about the needs of our university with respect to faculty use of AI. I talked to them under this rhetorical framing of “How to Encourage Adoption of AI Among Faculty”. You can find the deck here. I didn’t finish my typical habit of tweaking it until all the TikZ was perfect, and sort of don’t want to now, so it’s not perfectly beautiful the way I like it, but the ideas are there and I wanted to share them because I thought they were worth openly talking about and thinking about. The argument I make is fairly simple, and it’s come up here on the Substack quite a lot, but here it is again, more or less coalescing into one single piece.

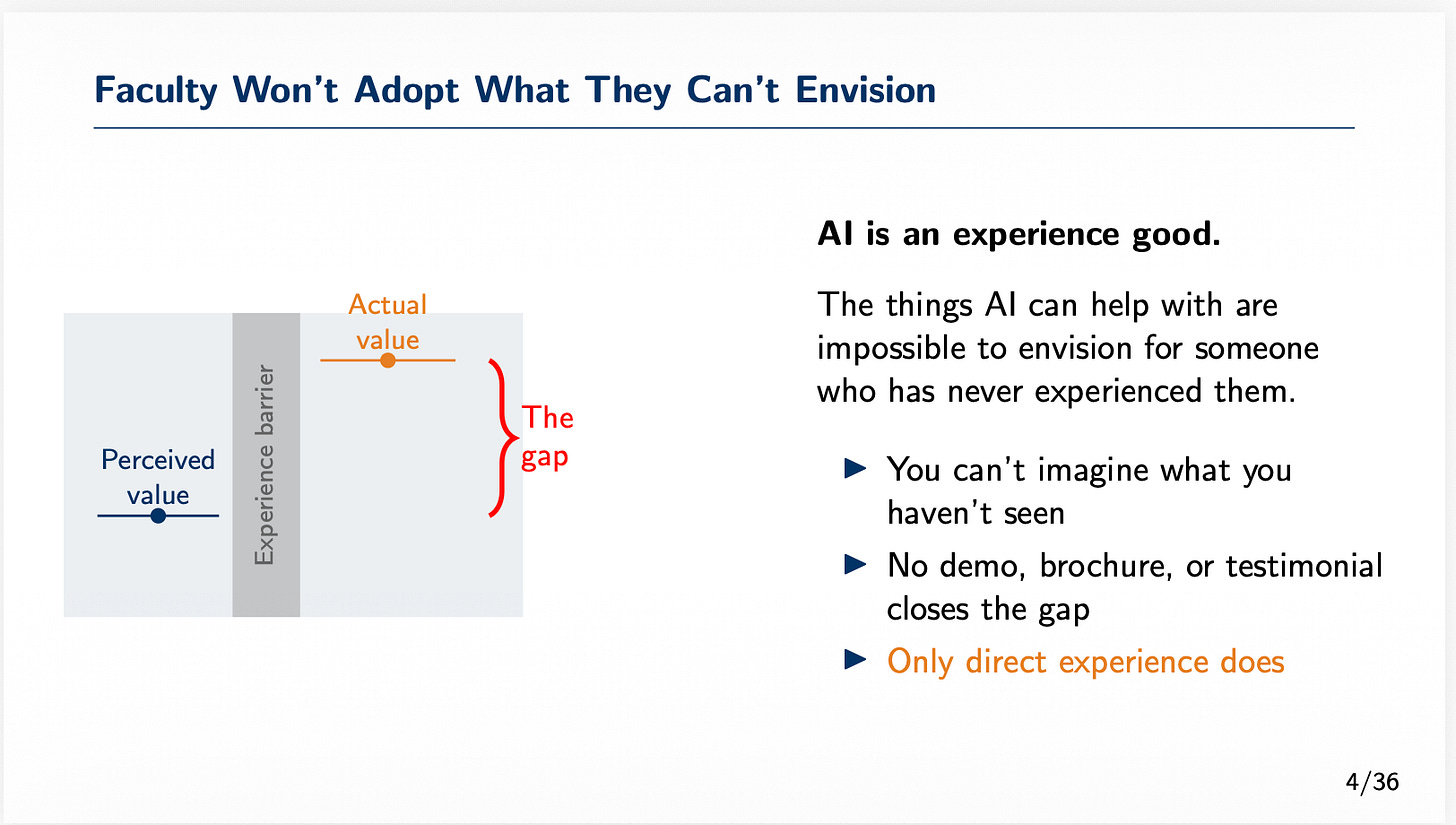

AI Agents are an experience good. What do I mean? I mean that, ex ante, a person unfamiliar with AI agents cannot put a price on what one is worth to them. They cannot do so because they do not have a frame of reference, and the more the technology differs from anything they know, the less likely it is that the frame of reference they reach for is even remotely accurate.

What might that frame of reference be? Oh I don’t know, maybe ChatGPT? Maybe this is just ChatGPT right? Or maybe this is like a Nintendo? Or is it a Sony Walkman? Like the modal academic not in computer science probably doesn’t give two rips, to be honest. If they don’t know what an AI Agent is now, in February 2026, chances are they maybe weren’t even paying the $20/month for ChatGPT. Maybe they were using the free version. Is that crazy to imagine? No. It’s not crazy. It’s almost certainly the case. Most of those weekly users of ChatGPT are not paying for it.

Having a PhD does not mean you understand the value of AI, or that there is any value. It’s an experience good: its value must first be experienced subjectively, and then you’ll know over time through experimentation just what its usefulness is and therefore what your willingness to pay is. I made this pretty crappy picture but you get the point:

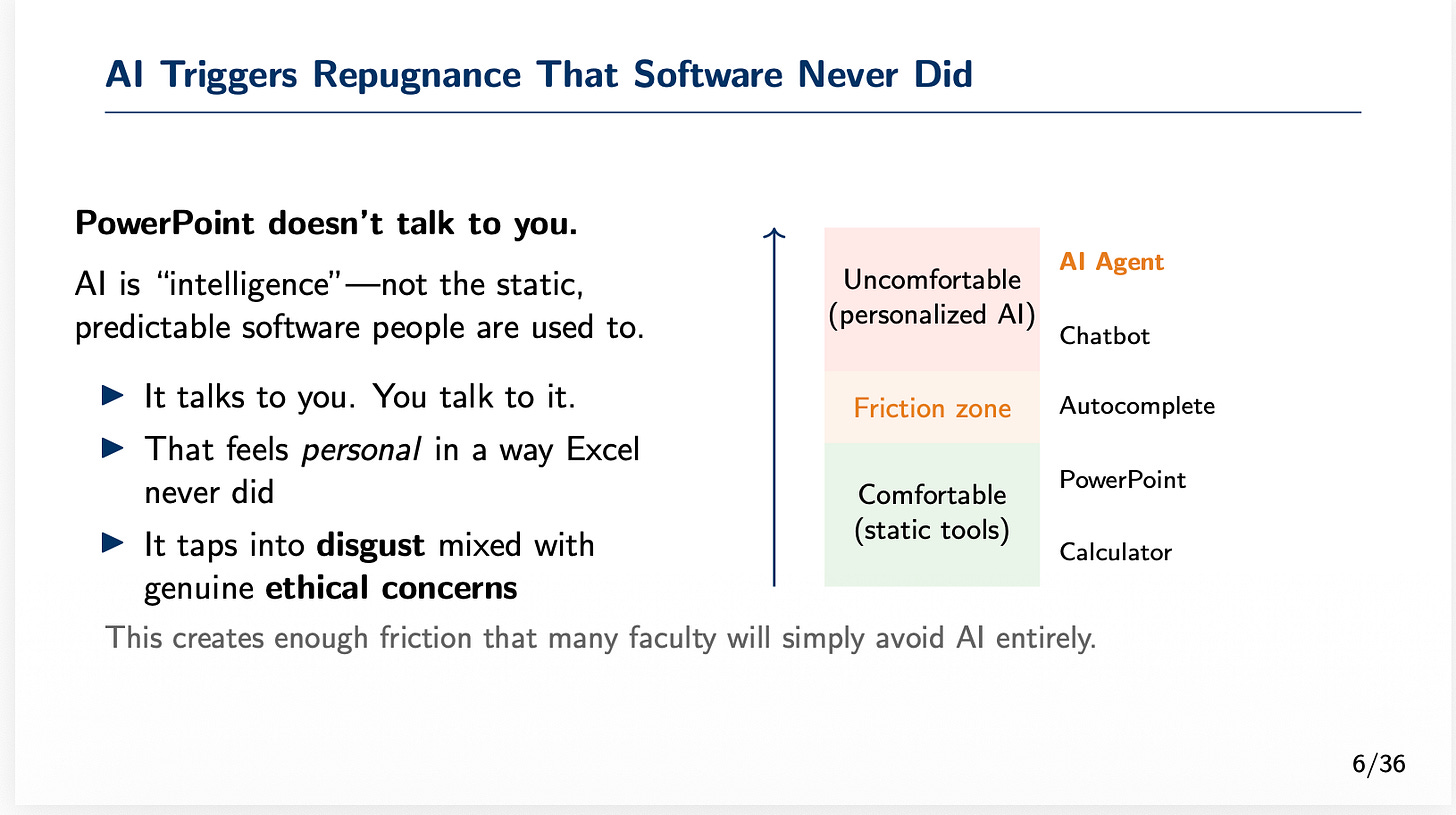

AI Triggers Repugnance. This is a different idea, and it’s one I’ve been toying with for three years now. I think artificial intelligence, specifically the large language models, is for many people repugnant. I mean it is morally offensive, and not because of the labor substitution or the automation or the impact on the environment. A willingness to hold those objections may in fact be endogenous to some deeper, almost primal thing, which is that this is technology that talks to me, and that is so utterly reprehensible that I want nothing to do with it, nor do I want anyone else to use it.

This is kind of a “repugnance as a constraint on markets” idea, or the cognition of disgust. It’s not bias per se; it’s wrapped up in psychology but also ethics and reasonable policy making, no doubt. But I bet that people react differently to this software precisely because it passes the Turing test so well. Excel could have many of the same impacts as ChatGPT and not trigger so much moral opposition. I think the way it insists on being personal and intimate as it answers our questions and performs the tasks we give it places it firmly in the uncanny valley. And I don’t think it really can exit that valley, at least not for many, as it is utterly alien, inhuman, pretending to be human, doing strange things to us and with us that we don’t understand. We are all so jaded about social media and phones by now anyway that it makes sense that there is so much caution, skepticism, and trepidation.

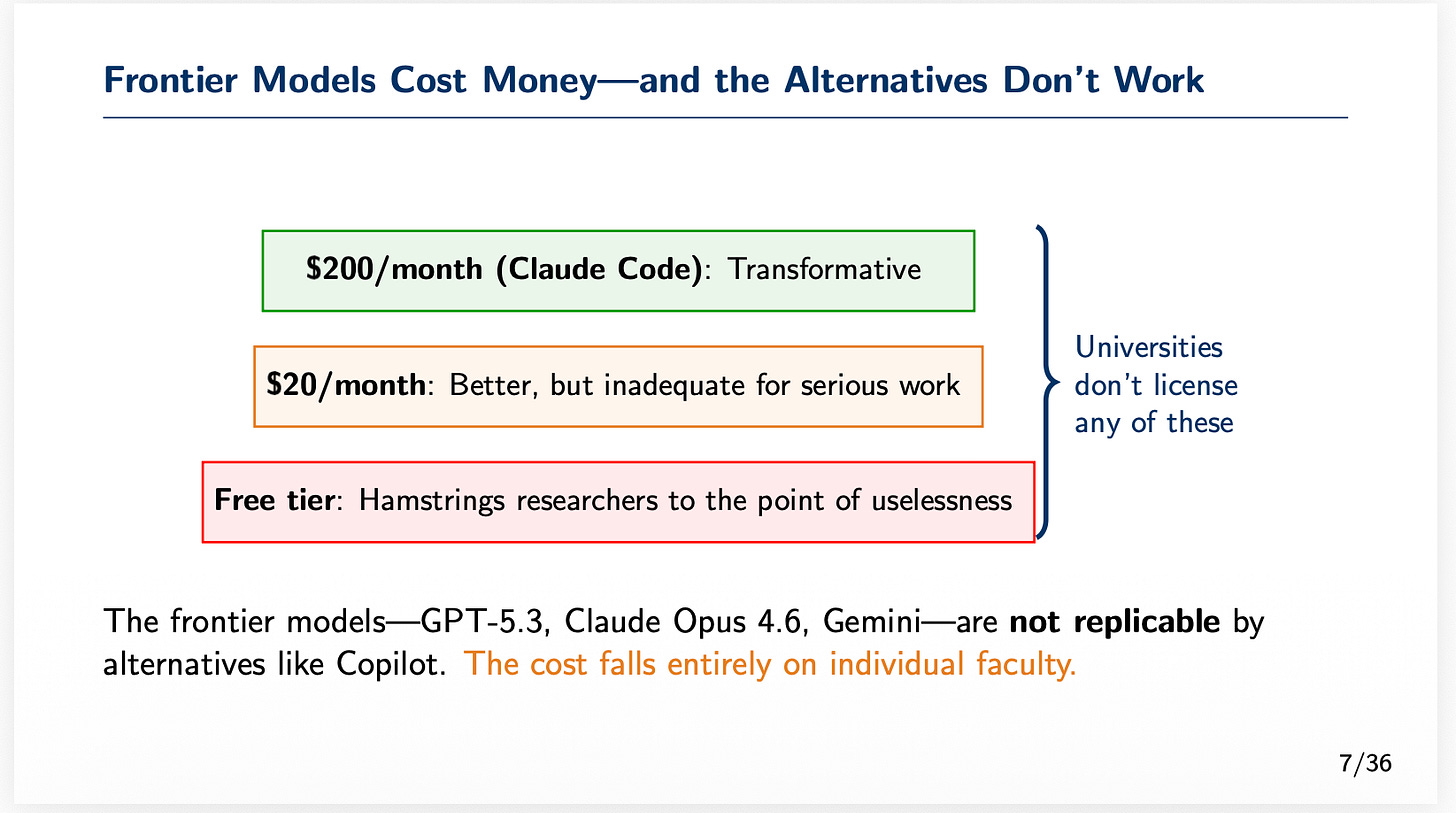

Frontier Models Are Expensive. So, here’s the problem. Most of our employers will not be paying for the very subscription tier that you need in order to be productive using Claude Code or some other equivalent frontier AI model. Unlike ChatGPT, where people could get by using the free version to do trivial tasks, that is impossible with Claude Code, even though there is a free version. There is no trivial task like that where Claude Code is useful.

Once you experience what Claude Code can do, and you push it to do more, and you challenge it to do more and more hard things in your life, you cannot avoid the unfortunate fact which is that you have to have it.

Security Risks Are Likely Huge. The problem is, I don’t think your or my university employer will pay for it. They will not pay for it because the security risks are massive.

I remember once teaching this class on environmental economics in grad school. And the author was talking about pollution and smokestacks. But then he said something interesting — he said car drivers are like really bad, amateur smokestack operators. The firms at least are attempting to be efficient in the environment they’re in for the sake of profit maximization. But normal people driving their cars — they idle, they don’t care for them, they just pour poison into the air and in aggregate do a lot of damage.

I think most of us, truth be known, are like car drivers. We are amateur smokestack operators. We always think it’s going to be someone else who creates real security risks for the system, but probably it’s us. It’s like that thing where everybody believes they are above average. I think we probably think we are more sophisticated and less prone to falling victim to malicious attacks than we probably are.

Which, if I’m right, means that the universities are in a jam. On the one hand, many of them are pushing (who knows why? Is it coming from the regents? Donors?) for faculty to adopt AI. But for what? And why? What’s the value? What’s the use? What’s inappropriate use?

Well, the really valuable use is high average reward, high variance. It’s like adopting a wild bull and trying to teach it to be civilized. If all you use AI for is writing your emails, it can do no damage and it has no real value. But if you can harness Claude Code towards improving the quality of your teaching while simultaneously helping you make real discoveries, real progress on your research, well, that is not a free lunch. That is going to cost. I don’t just mean money, though it will cost money. I mean there will be security risks. There will be vulnerabilities exposed.

Productivity Enhancements to Split the Market. So now consider this. What if the productivity gains lead to an increase in supply but not an increase in demand? Larry Katz does not expand the number of issues at the QJE, nor do any of the other journals for that matter. The slots stay the same. But the number of papers grows. The number of jobs in academia stays the same. In fact it falls, because of the falling fertility that is, as we speak, slamming at the door of the 2026 entering class and every class thereafter, and because of the supply side shocks to research universities everywhere from dried up federal grants and sharp reductions in overhead.

So now consider this. Someone else gets something that may very well increase the number of papers written. Some will write worse papers than the average, like what Reimers and Waldfogel found with other creative outputs (e.g., books). But this will widen the distribution, and definitely increase the noise if it has no effect on the best papers. So what does a noisier process that the journals face mean for you? Does it help you? No, it does not. We are all about to face a very strong wind. Maybe the strongest one we’ve ever seen.

Experience and Subsidies Are Necessary For Adoption. So this is the problem. The problem is that most of us cannot afford not to adopt. Most of us do not have the luxury of not adopting. We have families, we have dreams. We have papers inside us that we want out, and yet we are now competing with robots to write them. Which is better for society? What if the robots write better papers? What if robots write markdowns that are better than our best papers?

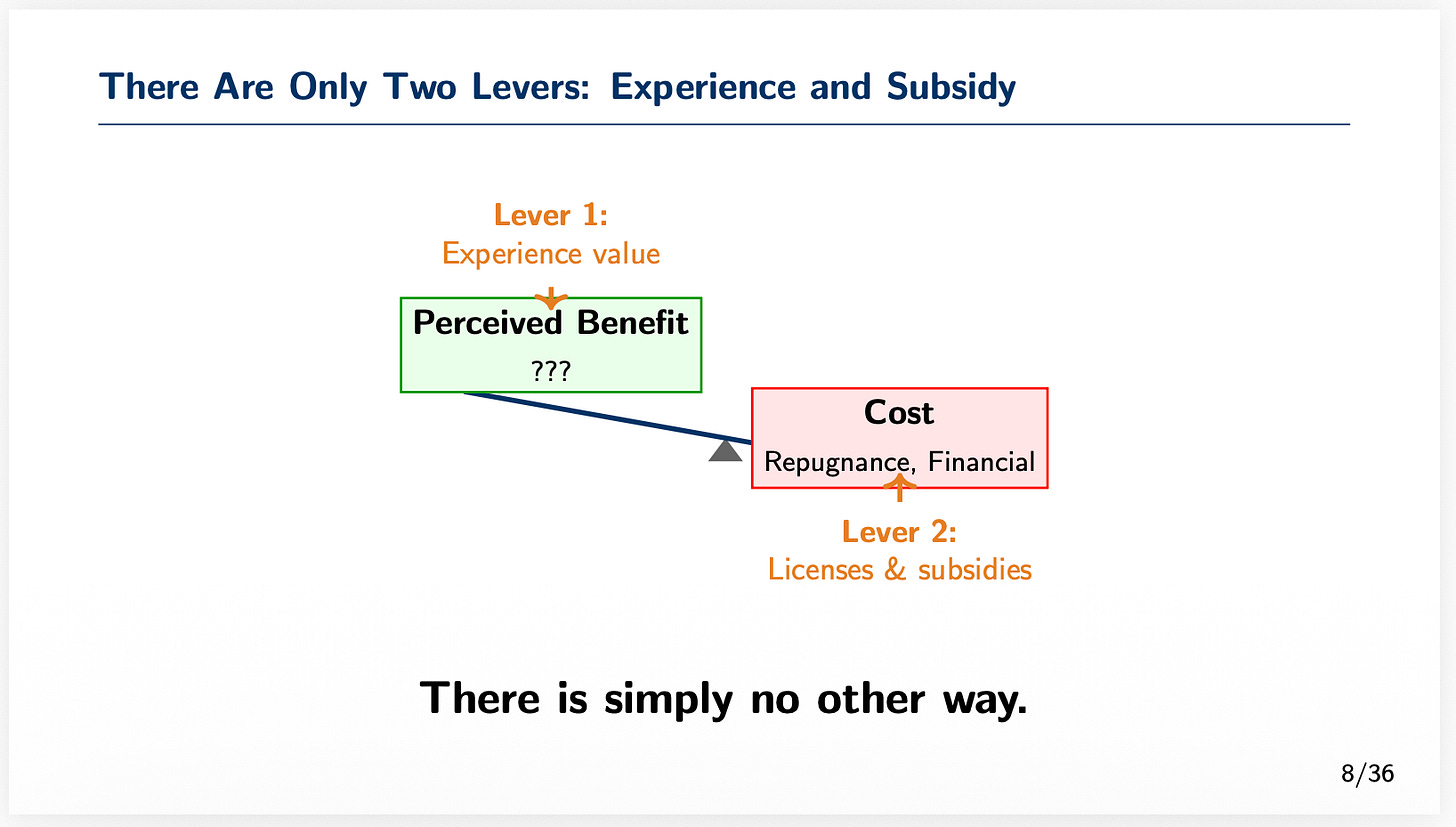

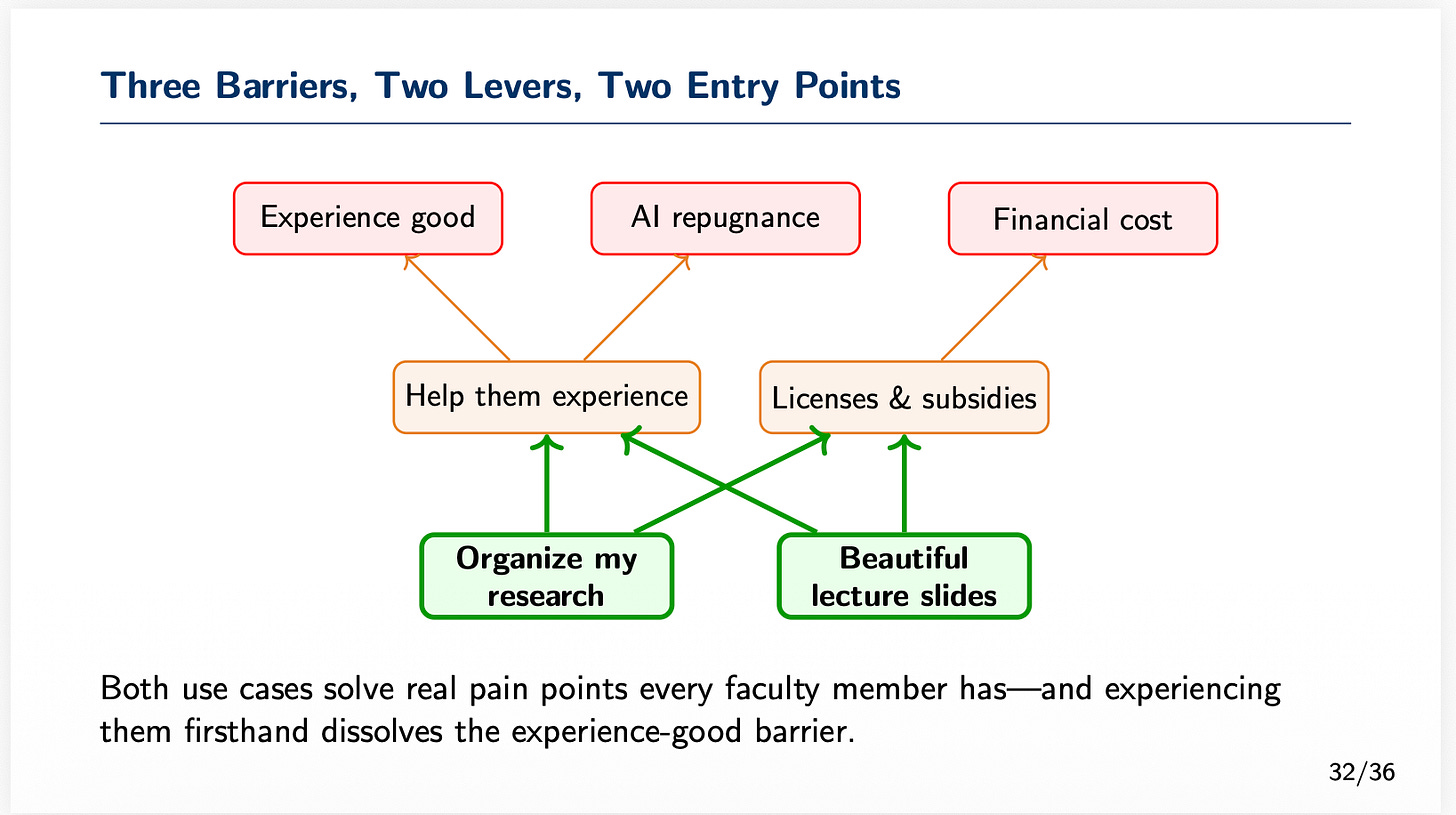

And yet the fact is that still there is this gap between the perceived value of AI Agents for research and teaching, the actual value which is unknown until you use it a lot, and the cost. So universities can only get adoption to happen, outside of self selection, through two levers — they must help faculty experience the value such that the perceived benefit and the actual benefit become the same thing. And they must lower the cost, which is definitely financial and probably can be moderated, but also ethical/psychological and that frankly probably cannot.

So I’ll take them in turn. What can the universities focus on to help raise the perceived benefit to something equalling the actual value? In short, it must be something that is time intensive, highly valuable, and something that — no offense — the average faculty member is terrible at.

The Making of Decks is the First Use Case. So I’ve said this before, but I just wanted to have a reason to share the deck and put it all together in one post. I earnestly think that if administrators are wanting faculty to adopt AI, they must focus on AI agents — specifically Claude Code in my opinion — and get faculty to make their classroom decks using it. If the goal is adoption, that’s what you do to make faculty adopt it.

The reason I say this is simple. Decks are extremely important objects we use for teaching. Some textbooks will make the decks for us and the ones they make basically are terrible, but we accept that because most faculty are not great at making decks just like they are not great at making exams or homework assignments. Which is also why the textbook companies bundle those too.

Additionally, truly phenomenal decks take time even if you are good at them, which I would say describes probably 1 in a hundred faculty (and definitely not this faculty member). Most use default settings. It’s why every talk using Beamer looks identical to another.

And just making a mediocre deck takes hours and hours of time. Just being bad at it takes so long! Just making a terrible terrible deck is so time consuming. It’s just awful.

And yet they are so important. They persist, they are passed around, they are studied. They are studied probably more than the books themselves. They are objects of learning. They give confidence to the teacher. The teacher knows where they are at all times. It helps them communicate to the class. It helps the students learn.

When done well.

What if there existed a technology that you could use to turn your lectures into the best decks ever made? What if it could make suitable replacements for the decks you have now at no time cost at all, and any additional time spent on making those decks only made the decks better? What if there existed a technology where the time you spent making the decks was somehow also time spent practicing your lecture and learning the material at a deeper level? And what if you had a technology that did that and yet somehow still left you with 10 hours a week?

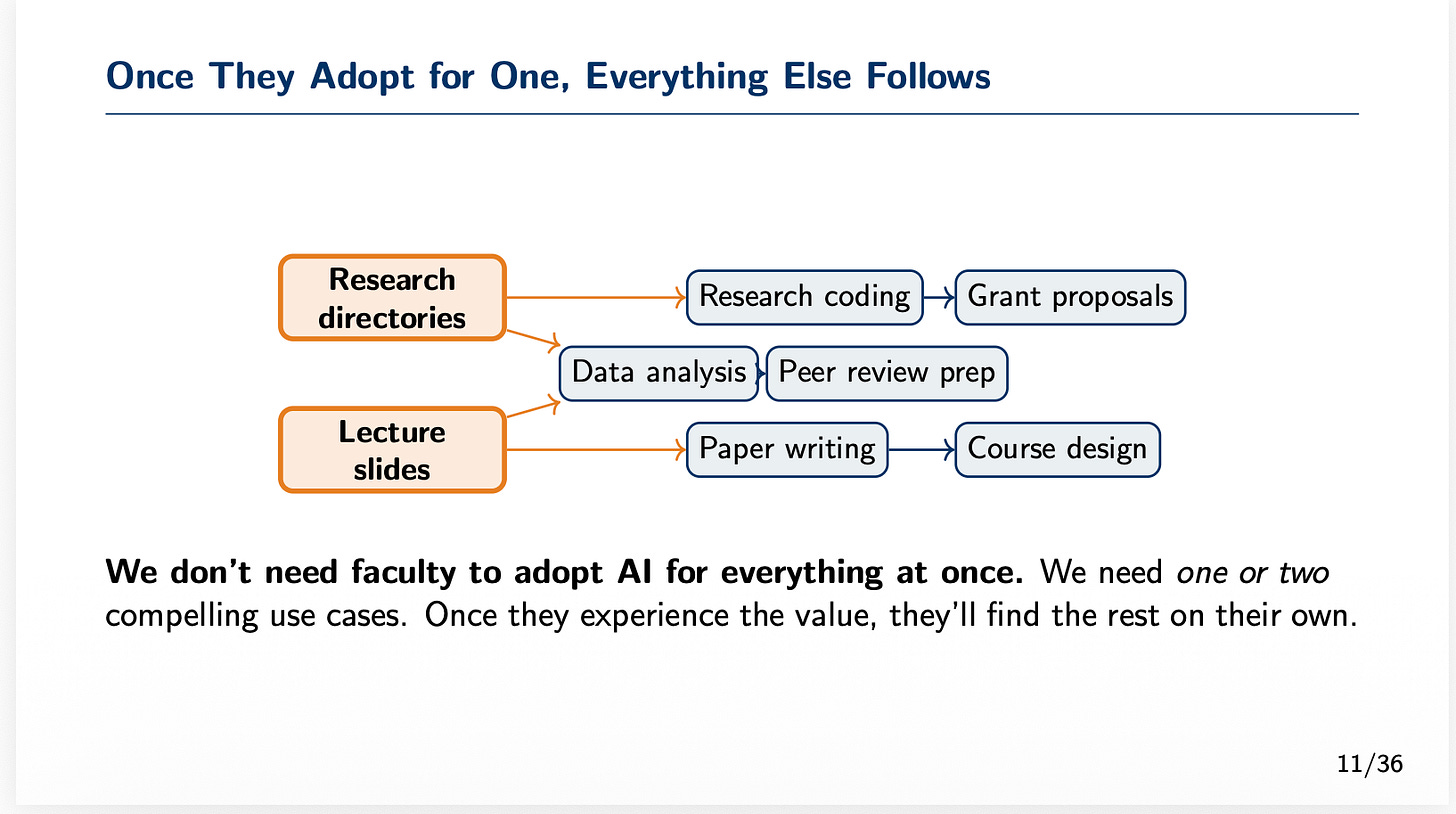

That and that alone is all that you need to get faculty to adopt AI. That’s it. You don’t need workshops showing 50 different use cases, with new people coming in each week. You just need them to make their lecture slides using Claude Code and they will then use it for everything else. It will fall like dominoes.

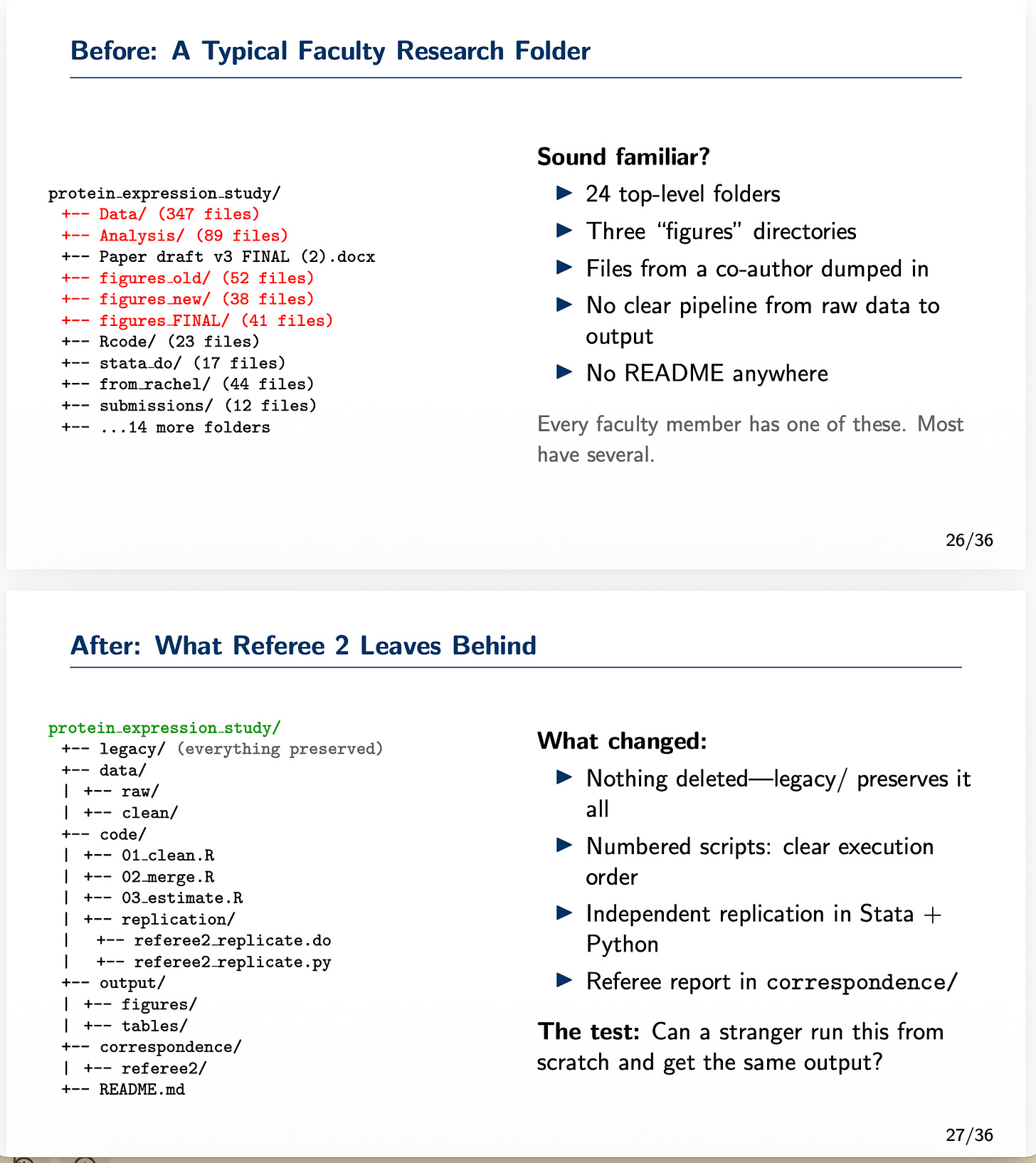

Fix Our Research Directories. The other use case I think I could see, but this one is a bit riskier for sure, is to have Claude Code go into our research and clean up the directories.

Why do I say “directories” and “research” as if they’re the same thing? Because — and here I am being provocative — what is research exactly? Sure it’s the creation of knowledge. It’s time spent on creative effort and discovery. 100%. But you know what else research is, even more basic than that?

Research is a collection of folders on a computer.

And that is why I think Claude Code is actually useful for more than just applied quantitative social scientists. Who on faculty is not carrying around research in Dropbox folders? I think whoever that person is does not need a Dropbox subscription, and is probably not a candidate for experiencing the benefits of AI agents for research. But if you have a project, and that project lives in folders on a computer, then you will benefit from Claude Code, even if I, some measly economist, cannot articulate what that benefit will be.

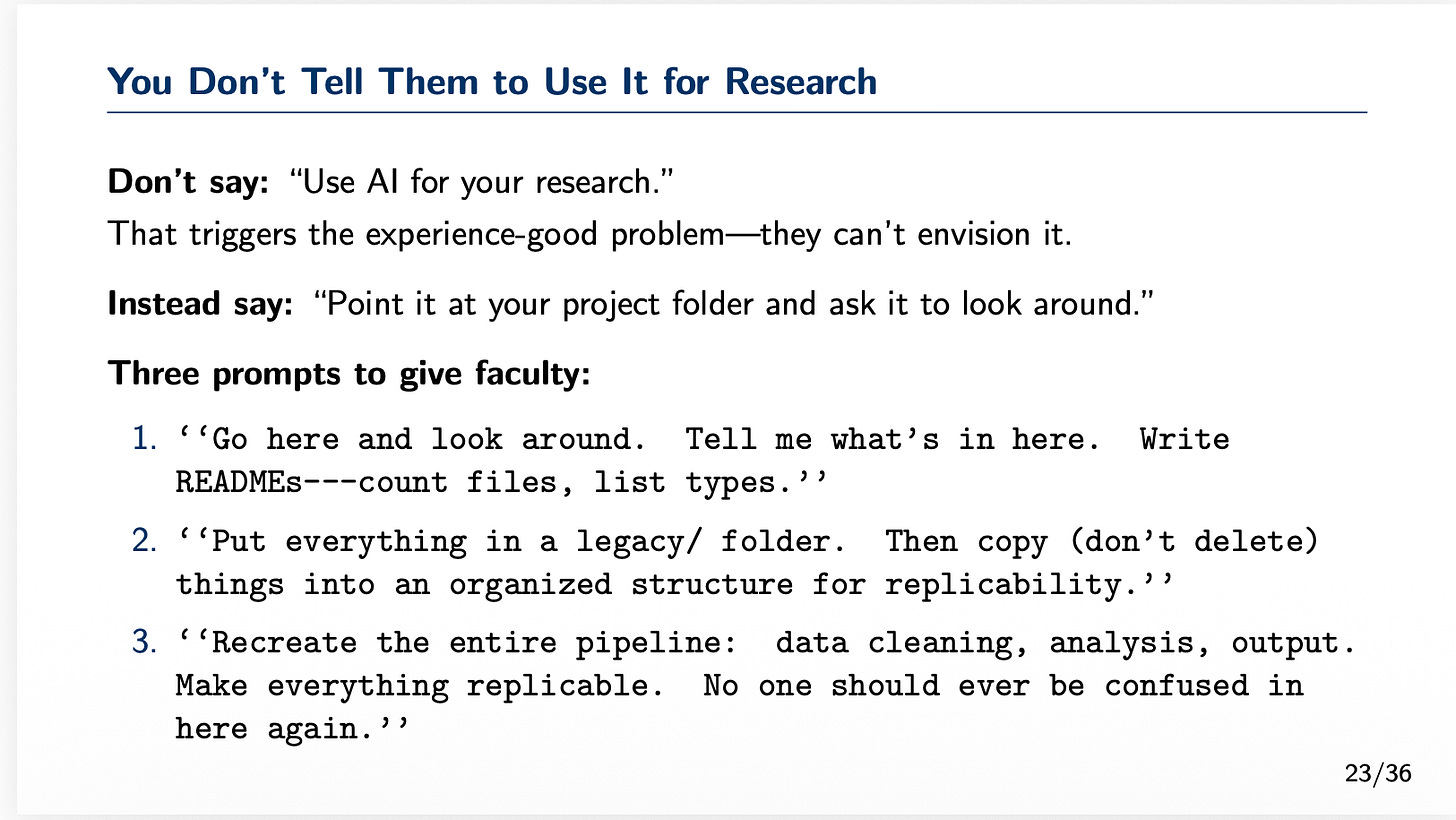

So, one of the other things that you can do to help a faculty member adopt AI, which will require minimizing the gap between perceived value and actual value via experience, is to simply give that faculty this prompt:

Tell them to just type that in. Install Claude as the desktop app. Point it to an old directory of theirs. If you really want to freak them out — tell them to point it at their dissertation. Tell them to copy and paste that in. Watch when something like this happens.

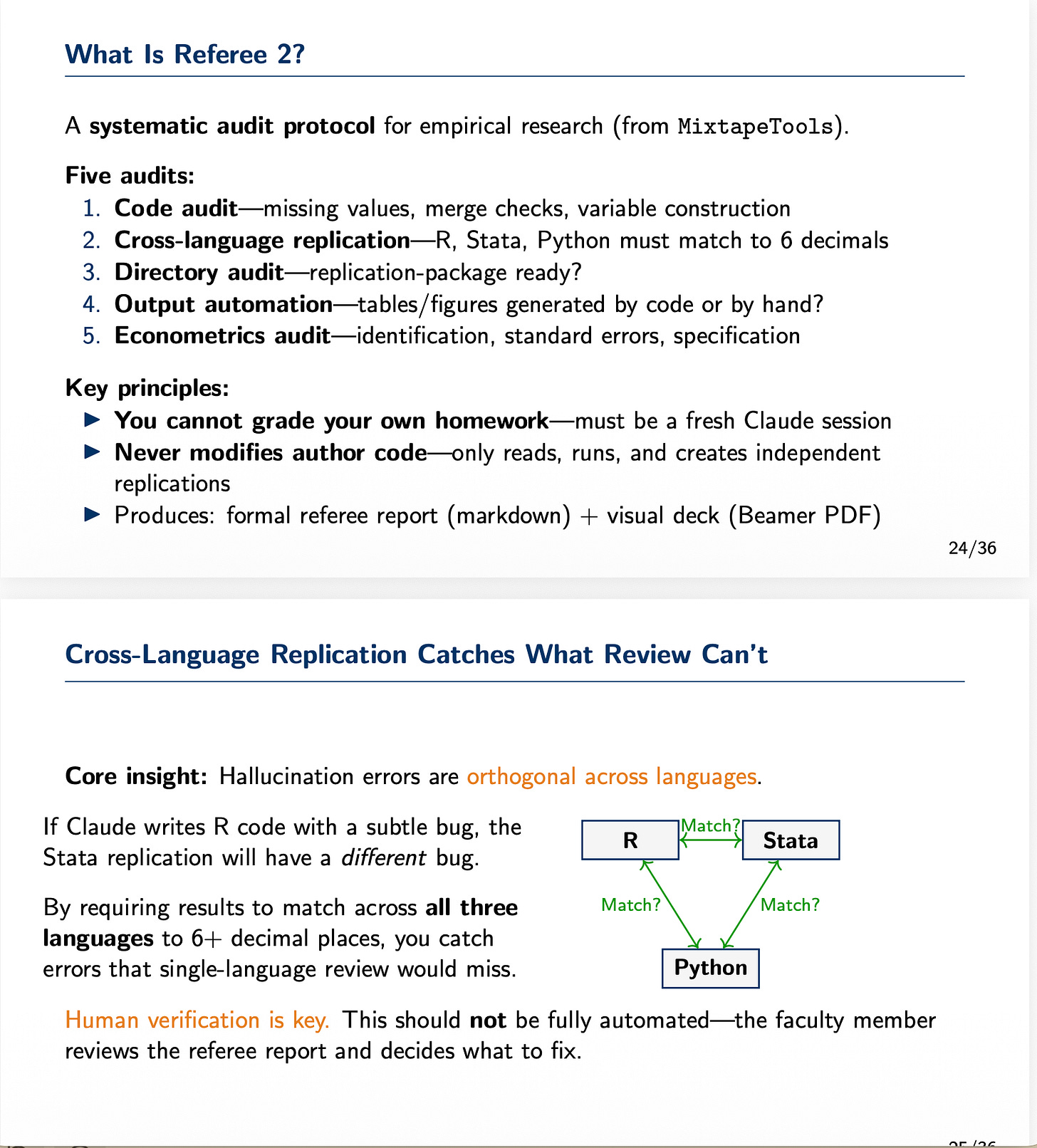

Or have them audit code. Tell them to use my “referee2” code audit persona at my MixtapeTools repo to audit their old code. Watch it as it not only audits the code in the language you wrote it in, but replicates it in Python and R too, just to confirm that no mistakes were made anywhere. Then writes a referee report. Then have it in a different manifestation do that report for you.

Now this is just the start of course. The real work going forward is not in automating all aspects of research. The real work for us probably is figuring out how in the world to verify all this ourselves. We have to be 100% certain of everything that was done, if it was done. Some will feel more comfortable with some things more than others, and it is not my place on this substack to dictate that I know where that line is or should be for all people. I have an opinion, but I’m not currently ready to share it. I just am saying that whoever figures out how to reduce errors to zero will probably be the highest marginal product player in this next phase.

Barriers, Levers and High Variance Costs. But here’s the rub. If you want to have faculty adopt AI for non-trivial tasks, you will need to help them experience what it can do. And you must reduce the costs. It’s possible experiencing it could increase AI repugnance, or it could decrease it. But it will probably enable a person to better value it, so long as they are willing to use it intensively for non-trivial tasks.

But then there are the financial costs. They are not trivial. To do anything remotely useful with Claude Code or some other AI agent will cost $100 to $200/month per person unless the university is able to enter into licensing agreements with firms like OpenAI, Google, or Anthropic. I really think no one should take seriously the claim that there is a fourth option. And of those three, it is my opinion it’s probably Claude Code. Which means there will need to be subsidies and licenses provided, just like there are computers provided to faculty.

And yet, anything that can happen, will happen, with enough trials. Which means here that if there is even the smallest risk of Claude Code doing something bad because you had faculty messing around in the terminal without a clue what they are doing, then you multiply that over a thousand or more faculty and even more students, and those horrible thick tail events will happen, the good ones and the bad ones. So this has to be solved, and the ones that wait to solve it will lose.
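To put rough numbers on the "enough trials" point, here is a minimal sketch in Python. It assumes every user is an independent trial with the same small chance of a bad event, which is a simplification. The function name and the per-user probability are hypothetical, chosen only for illustration, and are not estimates of any real risk.

```python
def prob_at_least_one(p: float, n: int) -> float:
    """Chance that an event with per-trial probability p happens
    at least once across n independent trials: 1 - (1 - p)^n."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    # Hypothetical: a 1-in-1,000 chance per user of something going badly wrong.
    p = 0.001
    for n in (1, 100, 1_000, 5_000):
        print(f"{n:>5} users: {prob_at_least_one(p, n):.1%} chance of at least one incident")
```

The point is only the shape of the curve: a risk that looks negligible for one person stops being negligible once you multiply it across a campus.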

Conclusion. Feel free to use that deck. You can also use this .tex file. You do not need to credit me. You do not need to ask me for permission to use it. If you think this is helpful, you can use it. I do think these are important and I think that others need to find a way to help get this information to administrators and dept chairs.

But I am not personally optimistic that this is going to happen, or frankly even that it should happen. I think the security risks are non-trivial, and so I have bought my own laptop, and I have my own subscription. I decided long ago that I will invest in myself, and I don’t need others to do it on my behalf. This is my career, this is my life, I am the one who is responsible for my classes, for teaching my students, for growing as a professor, for being as creative as I feel I need to be.

Thanks, Scott! I have been using CC for about 1 month now, and I'm still on the $20/mo plan and it's working out well so far. We'll see how long I last...

This is an interesting take and rings mostly true. I admit to being a skeptic of AI, not so much as a tool with obvious uses but as a seductive means to offload the responsibility we have as researchers to think. It's too tempting to offload intellectual struggle (for those who view the struggle as an obstacle rather than the very point of the thing), and so, like you said, there are security risks in the intellectual sense. (For my part, I love to code, so I view my becoming a fossil in that department with a lot of regret and resentment. So it goes.)

I think you were hinting at these other risks but didn't mention them explicitly. Researchers are generally pretty bad at computing security, and I suspect we're already swimming in an ocean of FERPA, HIPAA, and other violations attributable to people uploading that stuff through AI on the web. This is a big practical obstacle for people in the life and social sciences who deal with actual participant data.