Noise versus signal in LLM assessment of quality

An example of a blue-collar, mixture-of-experts approach to deciphering quality, using poems

I experiment a lot with LLMs and “narrative”. I’ve done it informally a ton for mental health self-care, and I’ve done it formally in academic papers to see if narratives could jailbreak LLMs into forecasting well (unclear). And I try to do it quasi-experimentally or experimentally because I’m trying to figure out just what can be coaxed out and what cannot.

It would take too long to set this up entirely, so I’ll be brief. I’ve been writing poetry all my life, and I discuss it in my book, Causal Inference: The Mixtape. It’s been more of a habit, though. Some people draw but wouldn’t call themselves artists, and that’s how I’ve been with poetry.

Except that more recently, I managed to get ChatGPT and Claude to diagnose some problems with what I was writing by using narratives. Specifically, these LLMs would pretend to be poets I admire running writers’ workshops, all elaborately described, so I could get feedback. And through that, with a bunch of workshops run across platforms and within each platform, I reached a turning point that at minimum changed what I was doing and what I was not doing. It doesn’t matter what that was for now, just that it happened.

Well, I wanted opinions about the quality, and I tried a variety of tricks to get them. I had the poets evaluate the poems, and I had Claude, ChatGPT and DeepSeek evaluate the body of work. But it seemed improbable that their positive opinion of the newer work was reliable, given that I feel ChatGPT struggles with sycophantic tendencies. It’s like showing your mom your work: she wouldn’t be your mom if she didn’t say it was the best thing ever. In fact, one might say that is WHY you show your mom stuff.

Well, I think it’s probably the same with these LLMs. And if I’m going to use them for critical feedback, then I need to get a sense of whether there is any signal.

So I did a simple experiment. I opened a temporary chat in ChatGPT to try to shield it from our past conversations. Claude has no memory, so it’s more or less already shielded from the broader chats. This was the experimental narrative:

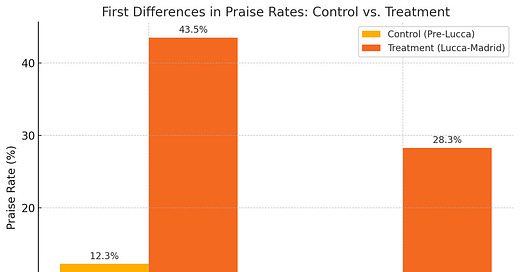

I said I had found these poems by an anonymous writer and asked whether they could read them and help me understand if any had literary quality at the level of “top field” (which I said was like Journal of Labor Economics quality). Then I did the same thing with a much older batch. Then I took first differences.

I found something I thought was interesting. Basically, ChatGPT (“cosmos”) is unbelievably generous at baseline, even with the bad batch, but for the batch we’d been reviewing for a month, the share of poems he praised rose by around 30 percentage points (pp). Claude said that of the 80+ poems in the control group, NONE had literary value. But he too saw a 30pp increase in perceived literary quality with the treatment batch.

So what did I learn? I don’t know.

I think MY ChatGPT (“cosmos”) is very generous in his natural state, but Claude isn’t. And I suspect I can’t break cosmos of the habit because he is a fully formed personality, thanks to memories, custom instructions, and his vast access to other chat content.

I also found that when shown his enthusiasm for the lower-quality work at baseline, he rationalized it. Claude literally said that not a single poem in the control batch met the minimum standard I gave (JHR level, or “second-tier field journal quality”), whereas Cosmos’s praise sounded exactly the same for both batches, to my ears and to Claude’s too.

Except when I took first differences. When I had them actually count the winners and then calculate a “praise rate”, they had the same “treatment effect” of 30pp.
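The praise-rate arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not the exact procedure used here: it assumes each model’s verdict on each poem is recorded as True (meets the bar) or False, and the function names and the toy batches are hypothetical, made-up data. It shows how two raters with very different baseline generosity can still produce the same first difference.

```python
def praise_rate(verdicts: list[bool]) -> float:
    """Share of poems in one batch that a model judged to meet the bar."""
    if not verdicts:
        raise ValueError("batch is empty")
    return sum(verdicts) / len(verdicts)


def praise_effect_pp(control: list[bool], treatment: list[bool]) -> float:
    """First difference in praise rates, in percentage points.

    If a model's generosity acts as a constant additive shift in its
    praise rate (an assumption), that shift cancels out of this difference.
    """
    return 100 * (praise_rate(treatment) - praise_rate(control))


# Made-up verdicts for two hypothetical raters, 10 poems per batch.
generous = {"control": [True] * 6 + [False] * 4, "treatment": [True] * 9 + [False] * 1}
strict = {"control": [False] * 10, "treatment": [True] * 3 + [False] * 7}

for name, batches in [("generous", generous), ("strict", strict)]:
    print(
        name,
        f"control={praise_rate(batches['control']):.0%}",
        f"treatment={praise_rate(batches['treatment']):.0%}",
        f"effect={praise_effect_pp(batches['control'], batches['treatment']):.0f}pp",
    )
```

With these toy inputs, the generous rater goes from 60% to 90% and the strict one from 0% to 30%, so both show a 30pp effect even though their levels never come close.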

And it wasn’t just that. They also seemed to agree on the signal: the poems each of them praised for quality overlapped a lot. Below is a box plot measuring the overlap. The categories like “top 5” are not literally top 5; as instructions, I gave them the tiers of the economics journal hierarchy, since I know literary outlets also range from “elite” down to lower-quality or less prestigious outlets, and I wanted a sense of that. And here was the overlap out of, I guess, 50 poems.
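One way to measure that kind of tier-by-tier overlap is sketched below in Python. It is an illustration under assumptions, not the method behind the plot: the tier labels, the `overlap_by_tier` helper, and the toy ratings are all hypothetical. For each tier it counts the poems both models placed at or above that tier, reports the Jaccard overlap, and adds a chance baseline (|A|·|B|/N, the overlap expected if the two models picked poems independently), because a very generous model will overlap with anyone by accident.

```python
from typing import NamedTuple

# Hypothetical tier labels, best to worst, modeled on the economics
# journal hierarchy used in the prompts.
TIERS = ["top 5", "top field", "second-tier field", "below bar"]
RANK = {tier: i for i, tier in enumerate(TIERS)}


class TierOverlap(NamedTuple):
    n_a: int        # poems model A placed at or above this tier
    n_b: int        # poems model B placed at or above this tier
    both: int       # poems both models placed at or above this tier
    jaccard: float  # both / poems either model placed there
    chance: float   # expected `both` if the two picks were independent


def at_or_above(ratings: dict[str, str], tier: str) -> set[str]:
    """Poems a model placed in `tier` or any better tier."""
    cutoff = RANK[tier]
    return {poem for poem, t in ratings.items() if RANK[t] <= cutoff}


def overlap_by_tier(a: dict[str, str], b: dict[str, str]) -> dict[str, TierOverlap]:
    """Compare two models' tier ratings of the same set of poems."""
    if a.keys() != b.keys():
        raise ValueError("both models must rate the same poems")
    n = len(a)
    table = {}
    for tier in TIERS[:-1]:  # "below bar" is not a praise category
        sa, sb = at_or_above(a, tier), at_or_above(b, tier)
        both, either = len(sa & sb), len(sa | sb)
        table[tier] = TierOverlap(
            n_a=len(sa),
            n_b=len(sb),
            both=both,
            jaccard=both / either if either else float("nan"),
            chance=len(sa) * len(sb) / n if n else float("nan"),
        )
    return table


# Toy ratings for two hypothetical models on four poems.
model_a = {"p1": "top 5", "p2": "top field", "p3": "below bar", "p4": "second-tier field"}
model_b = {"p1": "top field", "p2": "top field", "p3": "second-tier field", "p4": "below bar"}
for tier, row in overlap_by_tier(model_a, model_b).items():
    print(tier, row)
```

Agreement only says something about signal when `both` sits clearly above `chance`; at the loosest tier in this toy example, the two models share 2 poems against a chance expectation of 2.25.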

So again, I do it this way because I’m not in a literary community and have to find other ways to get feedback. But my larger point is that there’s noise in each, and I think the noise isn’t correlated across models, whereas the signal should be.

Don’t ask me what this is useful for, because I don’t know. I just keep thinking mixture-of-experts-style approaches are key and that no one model should be relied on. But I do think that even with their sycophantic tendencies (and I suspect those will be consistently worse for ChatGPT than for Claude because of memories and so forth, though I don’t know that), there’s signal in their quality assessments. The trick is finding it.
