ElectionGPT Update

In a previous substack, I explained this new prediction methodology that me and some students have been working on which we are calling ElectionGPT. I outlined the methodology here in detail, but will only do briefly using a flowchart now.

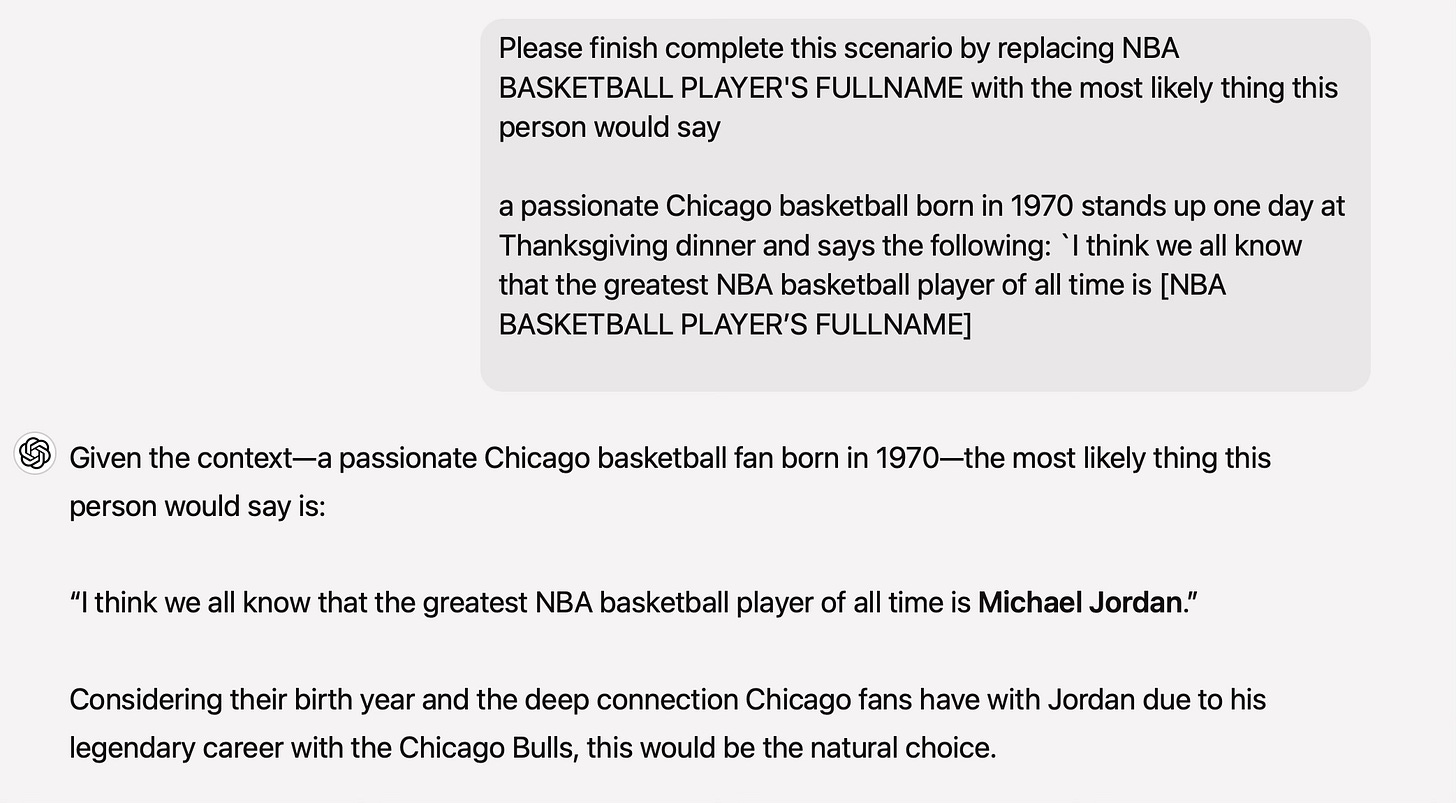

Basically, the idea is that we want to see if GPT-4o’s ability to mimic intelligent human speech can predict political events. The justification for this is that the attention mechanism in transformer models are predictions based on patterns found in its training data, and its training data consists of, for all practical purposes, every single piece of human speech online, including copywritten material. In order to develop intelligent human speech, it must “fill in” things that make sense. So for instance, if I say:

So, it is not merely making intelligent human speech as in forming sentences consisting of English words, verbs, subjects, proper punctuation and so on. It’s also selecting “the best words” that are not related to those things. It’s almost certainly the case that if you were born in 1970, and you were a passionate fan of the Chicago Bull’s, then when you announce the GOAT, you will say Michael Jordan. And in fact, if you didn’t say Michael Jordan, it’s that mislabeling that would be a sign of hallucination as that’s almost certainly not the “best answer”.

The point is that transformers are not merely finishing sentences. They are predicting the best sentences. They are constructing good sentences, reasonable claims, based on what exactly? Based on the fitting of a trillion or so parameters from its training data, which as I said, is most likely “all of the internet” seeing as how OpenAI and other firms appear to have run out of text data to the point that they are using LLMs to make synthetic data.

Keep reading with a 7-day free trial

Subscribe to Scott's Mixtape Substack to keep reading this post and get 7 days of free access to the full post archives.